YouCam API (v1.8)


Last modified: Feb. 24, 2026 d4c84a1

The YouCam APIs are a series of AI effects that let you beautify your photos and generate aesthetic creations beyond human imagination. Let the magic begin.

PLEASE NOTE that by reading this API documentation, or by setting up code pursuant to the instructions in this API documentation, you acknowledge it adheres to Perfect Corp's Privacy Policy and Terms of Service. If you have any issues, please contact: YouCamOnlineEditor_API@perfectcorp.com

YouCam API is a powerful and easy-to-use AI platform that provides beautiful, true-to-life visual effects powered by the latest AI technology. This document briefly introduces how to integrate these AI effects into your business. The YouCam APIs are standard RESTful APIs, so you can easily integrate them into your website, e-commerce platform, iOS/Android app, applets, mini-programs, and more.

The YouCam APIs are built on top of RESTful web APIs. The API server is the host of all APIs. Once you complete the authentication, you can start your AI tasks through the API Server.

YouCam API server:

https://yce-api-01.makeupar.com

To ensure fair usage and prevent abuse, our API implements rate limiting. There are two types of rate limits:

Per IP Address

Each IP address is allowed a maximum of 250 requests per 300 seconds, at no more than 5 queries per second (QPS). If this limit is exceeded, subsequent requests will receive a 429 Too Many Requests error.

Per Access Token

Each access token is allowed a maximum of 250 requests per 300 seconds, at no more than 5 queries per second (QPS). If this limit is exceeded, subsequent requests will receive a 429 Too Many Requests error.

Both conditions must be met for a request to be accepted. If either condition is not met, the request will receive a 429 Too Many Requests error. Please note that each unit-related query is processed in real time. Other APIs can use the access token until it expires.

It is recommended that you handle rate limit errors gracefully in your application by implementing the appropriate backoff and retry mechanisms.
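A minimal sketch of the backoff-and-retry handling recommended above, in Python with only the standard library. It assumes a hypothetical `send()` callable that performs one request and returns `(status_code, body)`; `call_with_backoff`, the retry count, and the delay values are illustrative choices, not documented limits.

```python
import random
import time
from typing import Callable, Tuple

# Hypothetical transport: performs one HTTP request and returns (status_code, body).
Sender = Callable[[], Tuple[int, bytes]]


def call_with_backoff(send: Sender, max_retries: int = 5, base_delay: float = 1.0,
                      max_delay: float = 60.0,
                      sleep: Callable[[float], None] = time.sleep) -> Tuple[int, bytes]:
    """Call send(), retrying on HTTP 429 with exponential backoff and full jitter."""
    status, body = send()
    for attempt in range(max_retries):
        if status != 429:
            break
        # The upper bound doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
        # waiting a random time below it keeps clients from retrying in lockstep.
        sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
        status, body = send()
    return status, body
```

Full jitter is one common backoff variant; any scheme that spaces retries out enough to stay under both the per-IP and per-token limits would serve the same purpose.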

Any files you upload are stored on our server for 24 hours, and then they're automatically deleted. Processed results are retained for 24 hours after completion.

Warning: Even though result files remain valid for 7 days, the download link may only be active for about two hours after it is created. If the download link expires, you can still access the file through its file ID: use that ID with another feature to retrieve it.

| AI Photo Editing | Supported Dimensions | Supported File Size | Supported Formats |
|---|---|---|---|
| AI Photo Enhance | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Photo Colorize | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Photo Color Correction | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Photo Lighting | 4096x4096 (long side <= 4096, short side >= 256) | < 10MB | jpg/jpeg/png |
| AI Image Extender | Input: long side <= 4096, Output: short side <= 1024, long side <= 2048 | < 10MB | jpg/jpeg/png |
| AI Replace | Input: long side <= 4096, Output: short side <= 1024, long side <= 2048 | < 10MB | jpg/jpeg/png |
| AI Object Removal | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Image Generator | Input: long side <= 4096, Output: long side <= 1024 | < 10MB | jpg/jpeg/png |
| AI Photo Background Removal | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Photo Background Blur | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |
| AI Photo Background Change | Input: long side <= 4096, Output: long side <= 4096 | < 10MB | jpg/jpeg/png |

| AI Portrait | Supported Dimensions | Supported File Size | Supported Formats |
|---|---|---|---|
| AI Avatar Generator | Input: long side <= 4096, Output: long side <= 1024 | < 10MB | jpg/jpeg/png |
| AI Face Swap | 4096x4096 (long side <= 4096), single face only, need to show full face | < 10MB | jpg/jpeg/png |

| AI Video Editing | Supported Dimensions | Supported File Size | Supported Formats |
|---|---|---|---|
| AI Video Enhance | Input: long side <= 1920; output: max 2x input resolution, up to 60 sec, 30 FPS, 8 bit | < 100MB | container: mov, mp4; video codec: MPEG-4, H.264 AVC; audio codec: aac, mp3 |
| AI Video Face Swap | Input: long side <= 4096; output: 1280x720, 30 FPS, up to 30 sec, 8 bit, single face only | < 100MB | container: mov, mp4; video codec: MPEG-4, H.264 AVC; audio codec: aac, mp3 |
| AI Video Style Transfer | Input: long side <= 4096; output: long side <= 1280, 16 FPS, up to 30 sec, 8 bit | < 100MB | container: mov, mp4; video codec: MPEG-4, H.264 AVC; audio codec: aac, mp3 |
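Checking a photo against the input limits in the tables above before uploading can save a round trip. A minimal sketch, assuming you already know the image's width, height, and byte size (reading them from the file is left out), and assuming "10MB" means 10 × 1024 × 1024 bytes; `check_photo_input` is an illustrative name. Only input limits for photo features are checked; output limits and the video rules are not.

```python
from pathlib import Path
from typing import List

MAX_LONG_SIDE = 4096                # "long side <= 4096" for photo inputs
MAX_FILE_BYTES = 10 * 1024 * 1024   # "< 10MB"; the 1024-based MB is an assumption
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def check_photo_input(filename: str, width: int, height: int, size_bytes: int,
                      min_short_side: int = 0) -> List[str]:
    """Return a list of problems; an empty list means these checks pass."""
    problems = []
    if Path(filename).suffix.lower() not in ALLOWED_EXTENSIONS:
        problems.append("unsupported format (expected jpg/jpeg/png)")
    if max(width, height) > MAX_LONG_SIDE:
        problems.append(f"long side {max(width, height)} exceeds {MAX_LONG_SIDE}")
    # AI Photo Lighting also requires short side >= 256: pass min_short_side=256.
    if min(width, height) < min_short_side:
        problems.append(f"short side {min(width, height)} is below {min_short_side}")
    if size_bytes >= MAX_FILE_BYTES:
        problems.append("file size must be under 10MB")
    return problems
```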

- Successful responses (100 - 399)

- Client error responses (400 - 499)

- Server error responses (500 - 599)

| General Error Code | Description |
|---|---|
| exceed_max_filesize | The input file size exceeds the maximum limit |
| invalid_parameter | The parameter value is invalid |
| error_download_image | There was an error downloading the source image |
| error_download_mask | There was an error downloading the mask image |
| error_decode_image | There was an error decoding the source image |
| error_decode_mask | There was an error decoding the mask image |
| error_download_video | There was an error downloading the source video |
| error_decode_video | There was an error decoding the source video |
| error_nsfw_content_detected | NSFW content was detected in the source image |
| error_no_face | No face was detected in the source image |
| error_pose | Failed to detect pose in the source image |
| error_face_parsing | Failed to perform face parsing on the source image |
| error_inference | An error occurred in the inference pipeline |
| exceed_nsfw_retry_limits | Retry limits exceeded to avoid generating NSFW image |
| error_upload | There was an error uploading the result image |
| error_multiple_people | Multiple people were detected in the source image |
| error_no_shoulder | Shoulders are not visible in the source image |
| error_large_face_angle | The face angle in the uploaded image is too large |
| error_hair_too_short | The input hair is too short |
| error_unexpected_video_duration | The video duration does not match the expected duration |
| error_bald_image | The input hairstyle is bald |
| error_unsupport_ratio | The aspect ratio of the input image is unsupported |
| unknown_internal_error | Other internal errors |
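When handling these codes in a client, it can help to separate problems the caller should fix in the input from ones that may clear up on a later retry. A minimal sketch of such a split; the grouping is our own reading of the descriptions above, not an official classification. `unknown_internal_error` is treated as an input problem because the FAQ in this document links it to files that were never actually uploaded.

```python
# Every general error code in the table above.
DOCUMENTED_CODES = {
    "exceed_max_filesize", "invalid_parameter", "error_download_image",
    "error_download_mask", "error_decode_image", "error_decode_mask",
    "error_download_video", "error_decode_video", "error_nsfw_content_detected",
    "error_no_face", "error_pose", "error_face_parsing", "error_inference",
    "exceed_nsfw_retry_limits", "error_upload", "error_multiple_people",
    "error_no_shoulder", "error_large_face_angle", "error_hair_too_short",
    "error_unexpected_video_duration", "error_bald_image", "error_unsupport_ratio",
    "unknown_internal_error",
}

# Assumption (not an official grouping): these describe failures in the service
# pipeline rather than in the submitted file, so a later retry may succeed.
SERVICE_SIDE = {"error_inference", "error_upload"}


def classify_error(code: str) -> str:
    """Return 'retry_later', 'check_input', or 'undocumented' for an error code."""
    if code not in DOCUMENTED_CODES:
        return "undocumented"
    return "retry_later" if code in SERVICE_SIDE else "check_input"
```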

First, register a free YouCam API account at https://yce.makeupar.com/. You can then purchase units or subscribe to a plan to use the service. You can view your subscription plan, pay-as-you-go units, and usage records at https://yce.makeupar.com/api-console/en/api-keys/. Go to the API Key tab on your account page to generate and manage the API keys you need to start using the YouCam API.

Overview

Starting with API version v1.5, we introduced a simplified V2 API to streamline integration.

Scope: Starting with API version v1.7, all YouCam APIs support the V2 API.

Note: V2 API is designed for workflow simplification only. It does not introduce advanced functionality. Both V1 APIs and V2 APIs use the same underlying AI models.

V1 API legacy API document: https://yce.makeupar.com/document/v1.x/index.html

Key Changes in V2 API

1. Endpoint Reduction

There is no need to call a separate authentication API to complete the authentication process.

V1 API Endpoints:

- POST /s2s/v1.0/client/auth – Authentication
- POST /s2s/v1.1/file/ai-task – Upload a file
- POST /s2s/v1.0/task/ai-task – Run an AI task
- GET /s2s/v1.0/task/ai-task – Get the result

V2 API Endpoints:

- POST /s2s/v2.0/file/ai-task – Upload a file or provide a file URL
- POST /s2s/v2.0/task/ai-task – Run an AI task
- GET /s2s/v2.0/task/ai-task – Get the result

Warning: Ensure that you replace the placeholder ai-task with the exact AI feature name supported by the API (for example, skin-analysis, cloth, hair-style, makeup-vto, or skin-tone-analysis). Using an incorrect or generic placeholder will result in an invalid request or API error. Always refer to the latest API specification for the list of valid feature identifiers.

2. Authentication

- V1 API: Requires an explicit call to the Authentication endpoint.

- V2 API: No separate authentication endpoint. Include your API key in the request header as a Bearer token:

Authorization: Bearer YOUR_API_KEY

You can find your API key at https://yce.makeupar.com/api-console/en/api-keys/.
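A minimal Python sketch (standard library only) of the two points above: substituting a real feature name for the ai-task placeholder and sending the API key as a Bearer token. `build_v2_request` and `V2_ENDPOINTS` are illustrative names of our own. Request bodies, and any query parameters the result call needs, are feature-specific and not covered here.

```python
import urllib.request
from typing import Optional

API_BASE = "https://yce-api-01.makeupar.com"

# V2 endpoints from the list above; {feature} replaces the ai-task placeholder.
V2_ENDPOINTS = {
    "upload": ("POST", "/s2s/v2.0/file/{feature}"),
    "run": ("POST", "/s2s/v2.0/task/{feature}"),
    "result": ("GET", "/s2s/v2.0/task/{feature}"),
}


def build_v2_request(step: str, feature: str, api_key: str,
                     json_body: Optional[bytes] = None) -> urllib.request.Request:
    """Build (without sending) a V2 request authenticated with a Bearer API key."""
    if feature == "ai-task":
        raise ValueError("replace the ai-task placeholder with a real feature name")
    method, path = V2_ENDPOINTS[step]
    headers = {"Authorization": f"Bearer {api_key}"}
    if json_body is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(API_BASE + path.format(feature=feature),
                                  data=json_body, headers=headers, method=method)
```

For example, `urllib.request.urlopen(build_v2_request("run", "skin-analysis", key, json_body=payload))` would send the request, where `payload` is whatever JSON the chosen feature's specification requires.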

3. Image Input

- Supports both file upload and file URL as input sources.

4. Polling Mechanism

- Processed results are retained for 24 hours after completion.

- No need for short-interval polling.

- Flexible polling intervals within the 24-hour window.

- Important: Polling is still required to check task status, as execution time is not guaranteed.
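Because polling is still required, a client needs a loop that stops on a terminal status. A minimal sketch, assuming a hypothetical `get_status()` callable that wraps GET /s2s/v2.0/task/{feature} and returns the task's status string; `success` and `error` are the terminal values also used in webhook payloads. The interval and timeout are placeholders; with results retained for 24 hours, both can be generous.

```python
import time
from typing import Callable

TERMINAL_STATUSES = {"success", "error"}


def poll_task(get_status: Callable[[], str], interval: float = 5.0,
              timeout: float = 600.0,
              sleep: Callable[[float], None] = time.sleep,
              clock: Callable[[], float] = time.monotonic) -> str:
    """Poll until the task reports success or error; raise TimeoutError after timeout."""
    deadline = clock() + timeout
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() + interval > deadline:
            raise TimeoutError(f"task still {status!r} after {timeout} seconds")
        sleep(interval)
```

`sleep` and `clock` are injectable so the loop can be tested without waiting; in normal use the defaults apply.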

5. JSON Structure Simplification

- List Style API now uses a simplified JSON parameter structure.

- AI Hairstyle Generator: https://yce-api-01.makeupar.com/s2s/v2.0/task/template/hair-style

- AI Clothes: https://yce-api-01.makeupar.com/s2s/v2.0/task/template/cloth

Summary

- V2 API = simplified workflow, same AI models.

- Reduced endpoints, easier authentication, flexible input options, improved polling, and cleaner JSON.

v1.8 – 2026-01-28

Overview

- Enhanced AI Skin Analysis to accept higher-resolution input (up to 4096 pixels, up from 1920), enabling more detailed skin evaluation.

- Customizable hairstyle generation using reference images via the AI Hairstyle Generator.

- AI Clothes API

- Supports automatic detection of garment categories through a new option, auto, for garment_category.

- A new parameter, change_shoes, controls whether shoes should be swapped. This allows users to create tasks (/s2s/v2.0/task/cloth) that handle category changes efficiently.

- Adds a shoes garment category under garment_category.

- Performance enhancements and bug fixes.

- JS Camera Kit:

- Supports new types ('facereshape')

- Jewelry & Watch UI Optimization

- iPad camera optimization

- Integrated support for MCP with access to 26 APIs.

- See MCP Capabilities

New Features

Fashion API

- AI Nail Virtual Try-On: Experience lifelike nail designs with a high-resolution virtual try-on feature, allowing you to see how different styles and colors look on your hands.

- AI Face Reshape: Utilize advanced facial analysis to reshape your face naturally, enhancing symmetry and overall appearance with AI-driven adjustments.

- AI Body Reshape: Achieve a more balanced body shape by applying intelligent reshaping techniques that refine proportions and contours.

v1.7 – 2025-12-29

Overview

- All YouCam AI APIs now support the simplified V2 API structure.

- AI Skin Analysis API optimization:

- Accepts any number of skin concerns as input. You may specify as many concerns as required. However, SD and HD Skin Analysis cannot be mixed in a single request. The call must include either all SD concerns or all HD concerns.

- Returns result images and scores directly in the output response rather than in a single ZIP file, allowing you to parse the scores first and download detection masks separately.

- JS Camera Kit:

- Supports new types ('ring', 'wrist', 'necklace', and 'earring')

- MCP support: See MCP

- Supports common MCP clients.

- Now supports 18 APIs.
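The v1.7 Skin Analysis change above forbids mixing SD and HD concerns in one request. A minimal client-side guard, assuming (from the `hd_wrinkle`, `hd_pore`, and `hd_texture` values in the unit history sample in this document) that HD concern identifiers start with `hd_` and treating every other identifier as SD. Check the Skin Analysis specification for the authoritative concern lists; `check_concern_mix` is an illustrative name.

```python
from typing import List


def check_concern_mix(concerns: List[str]) -> None:
    """Raise ValueError if SD and HD skin concerns are mixed in one request.

    Assumption: HD identifiers start with "hd_"; all others are treated as SD.
    """
    hd = [c for c in concerns if c.startswith("hd_")]
    if hd and len(hd) != len(concerns):
        raise ValueError("SD and HD concerns cannot be mixed in a single request")
```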

New Features

Fashion API

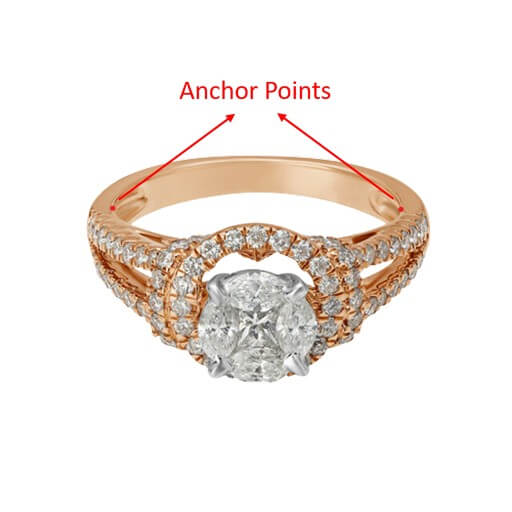

Jewelry & Watch API

- AI Ring Virtual Try-On

- AI Bracelet Virtual Try-On

- AI Watch Virtual Try-On

- AI Earring Virtual Try-On

- AI Necklace Virtual Try-On

Q: What does 'Unit' mean, and how is it used? A: Please note that credits are exclusively used within the YouCam Online Editor UI, whereas units are designated solely for AI API usage. Throughout this document, all references to 'credit' or 'unit' pertain to the Unit.

Please check https://yce.makeupar.com/ai-api for details on how many units each AI feature consumes.

Q: Why am I getting an InvalidTaskId error? A: You will get an InvalidTaskId error if you check the status of a task that has timed out. So, once you run an AI task, you need to poll its status within the polling_interval until the status becomes either success or error.

Q: What are client_id, client_secret, and id_token? A: Your API Key is the client_id, and your Secret Key is the client_secret. Please follow the Quick start guide to get the id_token and complete the authentication process.

For more information, please refer to our blog page and FAQ page, or send an email to YouCamOnlineEditor_API@perfectcorp.com. Our customer success team is happy to help. ;)

Q: How do I upload an image with curl? A: curl --location --request PUT '{url_api_response}' --header '{headers_in_api_response}' --data-binary @'{local_file_path}'
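The same PUT upload can be sketched in Python with the standard library. The upload URL and headers are the values returned by the File API response (the `{url_api_response}` and `{headers_in_api_response}` placeholders in the curl template); `build_upload_request` is an illustrative name. If the returned headers include a Content-Length, it must match the number of bytes you actually send.

```python
import urllib.request
from typing import Dict


def build_upload_request(upload_url: str, upload_headers: Dict[str, str],
                         data: bytes) -> urllib.request.Request:
    """Build the PUT request that sends raw file bytes to the File API's upload URL."""
    return urllib.request.Request(upload_url, data=data,
                                  headers=dict(upload_headers), method="PUT")


# Usage (not executed here):
#   from pathlib import Path
#   req = build_upload_request(url, headers, Path("./test.jpg").read_bytes())
#   urllib.request.urlopen(req)
```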

Example:

curl --location --request PUT 'https://example.com/sample-result-url' --header 'Content-Type: image/jpg' --header 'Content-Length: 50000' --data-binary @'./test.jpg'

Q: What platforms does the AI API support? Can it be used on mobile devices? A: The AI API works seamlessly across different platforms. Since it's built on a standard RESTful API, you can use it for server-to-server communication, on mobile devices, or in a web browser - whatever best fits your needs.

Q: Is it possible to use the AI API with Flutter on both Android and iOS? A: Absolutely. Since AI APIs are standard RESTful APIs, you can access them using Flutter's built-in HTTP APIs to make requests. Here are some official Flutter documents on making network queries.

Here is a simple way to make an HTTP GET call with Bearer authentication using Flutter's http package:

import 'dart:convert';
import 'package:http/http.dart' as http;

Future<void> fetchData() async {
  String token = "your_bearer_token_here"; // Replace with your actual token
  final response = await http.get(
    // Replace `ai-task` with the AI feature you intend to use.
    Uri.parse("https://yce-api-01.makeupar.com/s2s/v1.0/task/ai-task"),
    headers: {
      "Content-Type": "application/json",
      "Authorization": "Bearer $token",
    },
  );
  if (response.statusCode == 200) {
    print("Data fetched successfully: ${response.body}");
  } else {
    print("Failed to fetch data: ${response.statusCode}");
  }
}

Key Steps to Auth:

- Retrieve the Token – You can store and retrieve the token using SharedPreferences or another secure storage method.

- Include the Token in Headers – Use "Authorization": "Bearer $token" in the request headers.

- Handle Expired Tokens – If the API returns a 401 InvalidAccessToken error, refresh the token if needed.

Q: Where can I find the secret key? A: The secret key is shown only once, when you first generate it. Make sure to save it right away; you won't be able to view it again later.

Q: Is it possible to integrate AI APIs into your website using Wix? A: We do not currently have an official integration guide for using AI APIs with Wix. However, the RESTful API itself does not impose any restrictions on the platform, provided it supports standard web queries.

We strongly recommend that you begin by reading the official documentation provided by Wix: https://dev.wix.com/docs/develop-websites/articles/get-started/integrate-with-3rd-parties

Wix offers several methods for integrating with third-party APIs, primarily through its Velo development platform:

Fetch API: This is the most common method and an implementation of the standard JavaScript Fetch API. It allows you to make HTTP requests to external APIs from your Wix site's frontend or backend code. Backend calls: Recommended for security (especially for APIs requiring keys) and to avoid CORS issues. Use the Secrets Manager to securely store API keys. Frontend calls: Possible but less secure and may encounter CORS restrictions.

npm Packages: Velo supports the use of approved npm packages, allowing you to leverage a wide array of prebuilt JavaScript modules to extend your site's functionality with third-party features.

Service Plugins (formerly SPIs/Custom Extensions): These enable you to inject custom logic or integrate third-party services directly into Wix's business solutions (e.g., Wix Stores, Wix Bookings). Service plugins allow you to customize specific parts of existing app flows or integrate external services for functionalities like custom shipping rates, dynamic pricing, or alternative payment providers.

HTTP Functions: You can expose your site's functionality as an API, allowing third-party services to call your Wix site's backend functions and interact with your site's data or logic.

Steps for Integration (using Fetch API as an example):

Store API Keys: If the third-party API requires authentication, store sensitive credentials such as API keys securely in Wix's Secrets Manager.

Import wix-fetch: In your Velo backend or frontend code, import the fetch function from the wix-fetch module. Backend code that reads a stored key also imports getSecret from wix-secrets-backend.

import { fetch } from 'wix-fetch';
import { getSecret } from 'wix-secrets-backend'; // backend code only

Make API Calls: Use the fetch function to send requests to the third-party API, including the necessary headers and body data.

export async function getWeatherData() {
  const apiKey = await getSecret("yourApiKeyName"); // Retrieve from Secrets Manager
  const response = await fetch(`https://api.weatherapi.com/v1/current.json?key=${apiKey}&q=London`, {
    method: 'GET',
    headers: { 'Content-Type': 'application/json' }
  });
  const data = await response.json();
  return data;
}

Handle Responses: Process the data received from the API and integrate it into your site's design or functionality.

Q: Is it possible to integrate AI APIs into WooCommerce? A: We do not currently have an official integration guide for using AI APIs with WooCommerce. However, the RESTful API itself does not impose any restrictions on the platform, provided it supports standard web queries.

We strongly recommend that you begin by reading the official documentation provided by WooCommerce: https://woocommerce.com/document/woocommerce-rest-api/

Integrating third-party APIs with WooCommerce enhances store functionality and streamlines operations. This can be achieved through various methods:

Utilizing WooCommerce REST API:

WooCommerce provides a robust REST API that allows external applications to interact with your store data. You can create API keys with specific permissions (Read, Write, or Read/Write) in WooCommerce > Settings > Advanced > REST API. This enables you to automate tasks like inventory syncing, order processing, customer data synchronization, and more, by building custom integrations or using third-party services that leverage this API.

Employing Plugins:

Many WordPress and WooCommerce plugins are designed specifically for integrating with popular third-party services like payment gateways, shipping carriers, CRM systems, and accounting software. Plugins often offer pre-built integrations, simplifying the setup process and reducing the need for custom coding. Examples include plugins for specific payment processors (e.g., Stripe, PayPal), shipping solutions (e.g., FedEx, USPS), or general API integration tools like WPGet API.

Custom Code and Webhooks:

For unique integration needs or when a suitable plugin isn't available, you can implement custom code within your WordPress theme or a custom plugin. This involves using WordPress/WooCommerce hooks and filters to trigger API calls at specific events (e.g., woocommerce_payment_complete for post-purchase actions). Webhooks can also be used to send real-time data from WooCommerce to a third-party service when certain events occur (e.g., new order, product update).

Invalid TaskId Error Why: You’ll receive an InvalidTaskId error if you attempt to check the status of a task that has timed out. Therefore, once an AI task is initiated, you’ll need to poll for its status within the polling_interval until the status changes to either success or error. Solution: To avoid the task becoming invalid, it’s necessary to implement a timed loop that queries the task status at regular intervals within the allowed polling window.

500 Server Error / unknown_internal_error A: Important: Simply calling the File API does not upload your file. You must manually upload the file to the URL provided in the File API response. That URL is your upload destination; make sure the file is successfully transferred there before proceeding.

Before calling the AI API, ensure your file has been successfully uploaded. Use the File API to retrieve an upload URL, then upload your file to that location. Once the upload is complete, you'll receive a file_id in the response; this ID is what you'll use to access AI features related to that file.

404 Not Found error when using AI APIs A: Important: Simply calling the File API does not upload your file. You must manually upload the file to the URL provided in the File API response. That URL is your upload destination; make sure the file is successfully transferred there before proceeding.

Before calling the AI API, ensure your file has been successfully uploaded. Use the File API to retrieve an upload URL, then upload your file to that location. Once the upload is complete, you'll receive a file_id in the response; this ID is what you'll use to access AI features related to that file.

The API response returns the same style ID in Postman A: This is because Postman (Pretty view) and Chrome use JavaScript to parse JSON responses. In JavaScript, numbers larger than 9007199254740991 (2^53-1) cannot be represented precisely. They are rounded to the nearest representable value, which makes different IDs appear identical (e.g., 219691778809271815 → 219691778809271800).

- Precision Loss in JavaScript/JSON Error Handling

- Cause: JavaScript's Number type cannot safely handle integers beyond 2^53 - 1, leading to silent truncation or rounding.

- Solution: Use a JSON parser like json-bigint to treat IDs as strings and retain full precision.

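The rounding is easy to reproduce outside the browser. In this Python sketch, `json.loads(..., parse_int=float)` mimics a JavaScript-style parser that turns every integer into a double, while Python's default parser keeps integers exact. The first ID is the example above; the second is an illustrative neighbor.

```python
import json

# Two distinct IDs beyond 2**53 - 1.
raw = '{"ids": [219691778809271815, 219691778809271810]}'

# parse_int=float turns every integer into an IEEE-754 double, as JSON.parse does.
js_like = json.loads(raw, parse_int=float)["ids"]
# Python's default parser keeps integers exact (arbitrary precision).
exact = json.loads(raw)["ids"]

print(js_like[0] == js_like[1])  # True: the two IDs collapse to the same double
print(exact[0] == exact[1])      # False: full precision preserved

# When IDs cross into JavaScript or other double-based tooling, pass them as strings.
id_strings = [str(i) for i in exact]
```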

Bearer Authentication 400 Error A: When using the V2 API, you must include your API key in every request by adding a Bearer authorization header. Failure to do so will result in authentication errors.

Valid Request Header Format

Authorization: Bearer YOUR_API_KEY

Common Issues

Missing Bearer Prefix: The Authorization header must start with Bearer, followed by a space and the API key. Incorrect example: Authorization: YOUR_API_KEY

Unnecessary Characters: Do not include angle brackets (< or >) around the API key. These characters are not required and will cause the request to fail. Incorrect example: Authorization: Bearer <YOUR_API_KEY>

Incorrect API Key: Ensure that the API key provided in the Authorization header is correct and active.
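The first two common issues can be caught before a request is sent. A minimal sketch that checks an Authorization header value for them; `check_authorization_header` is an illustrative name. Whether a key is correct and active can only be confirmed by the API itself.

```python
from typing import List


def check_authorization_header(value: str) -> List[str]:
    """Return the formatting issues found in an Authorization header value."""
    issues = []
    has_prefix = value.startswith("Bearer ")
    if not has_prefix:
        issues.append("missing 'Bearer ' prefix")
    key = value[len("Bearer "):] if has_prefix else value
    if "<" in key or ">" in key:
        issues.append("remove angle brackets around the API key")
    if not key.strip():
        issues.append("API key is empty")
    return issues
```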

Check your unit details and usage history. Please note that credits are exclusively used within the YouCam Online Editor UI, whereas units are designated solely for AI API usage. Throughout this document, all references to 'credit' or 'unit' pertain to the Unit.

Request samples


curl --request GET \
  --url https://yce-api-01.makeupar.com/s2s/v1.0/client/credit \
  --header 'Authorization: Bearer <access_token for v1, API Key for v2>'
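A Python equivalent of the request above, plus a helper that totals the unit balance from a response shaped like the 200 sample in this section. Two assumptions: `expiry` is a Unix timestamp in milliseconds (inferred from its 13 digits), and entries whose expiry has passed should not count. Function names are illustrative.

```python
import json
import urllib.request
from datetime import datetime, timezone

CREDIT_URL = "https://yce-api-01.makeupar.com/s2s/v1.0/client/credit"


def build_credit_request(token: str) -> urllib.request.Request:
    """GET unit details; token is the v1 access token or the v2 API key."""
    return urllib.request.Request(CREDIT_URL, headers={"Authorization": f"Bearer {token}"})


def expiry_utc(entry: dict) -> datetime:
    """Assumption: expiry is a Unix epoch timestamp in milliseconds."""
    return datetime.fromtimestamp(entry["expiry"] / 1000, tz=timezone.utc)


def usable_units(response_body: str, now: datetime) -> int:
    """Sum amount over entries in results whose expiry is still in the future."""
    results = json.loads(response_body).get("results", [])
    return sum(e["amount"] for e in results if expiry_utc(e) > now)
```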

Response samples

- 200

{
  "status": 200,
  "results": [
    {
      "id": 13105541279084536,
      "type": "SubsToken",
      "amount": 10,
      "expiry": 1704789135000
    }
  ]
}

View unit history


Query parameters

| Parameter | Type | Description |
|---|---|---|
| page_size | integer | Example: page_size=20. Number of results to return in a page. Valid values are between 1 and 30. Default: 20. |
| starting_token | string | Example: starting_token=13045969587275114. Token for current page. Start with |
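Walking the whole history means following `next_token` from page to page. A minimal sketch, assuming a hypothetical `fetch_page(page_size, starting_token)` callable that performs GET /s2s/v1.0/client/credit/history and returns the parsed JSON. How the API signals the last page is not stated here, so the stop condition (an empty `next_token`, or one already requested) is an assumption; the repeat check also guards against the sample response, whose `next_token` equals the `starting_token` in the request. Tokens are kept as strings so large values never pass through a double.

```python
from typing import Callable, Iterator, Optional

# Hypothetical: fetch_page(page_size, starting_token) -> parsed JSON body.
FetchPage = Callable[[int, Optional[str]], dict]


def iter_history(fetch_page: FetchPage, page_size: int = 20) -> Iterator[dict]:
    """Yield unit-history entries page by page, following result.next_token."""
    if not 1 <= page_size <= 30:
        raise ValueError("page_size must be between 1 and 30")
    token: Optional[str] = None
    used_tokens = set()
    while True:
        result = fetch_page(page_size, token)["result"]
        yield from result.get("history", [])
        next_token = result.get("next_token")
        # Assumption: an empty next_token, or one already requested, means no more pages.
        if not next_token or str(next_token) in used_tokens:
            return
        token = str(next_token)  # string form avoids precision loss for large tokens
        used_tokens.add(token)
```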


Request samples


curl --request GET \
  --url 'https://yce-api-01.makeupar.com/s2s/v1.0/client/credit/history?page_size=20&starting_token=13045969587275114' \
  --header 'Authorization: Bearer <access_token for v1, API Key for v2>'
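In the history response, `dst_actions` is itself a JSON-encoded string inside the JSON body, so it needs a second decode. A minimal sketch that flattens one history entry; reading negative `amount` values as units deducted (the sample shows -2 for `photo_enhance`) is our interpretation, and `summarize_history_entry` is an illustrative name.

```python
import json


def summarize_history_entry(entry: dict) -> dict:
    """Return the action, net units across info.credits, and decoded dst_actions."""
    info = entry.get("info", {})
    units = sum(c.get("amount", 0) for c in info.get("credits", []))
    # dst_actions arrives as a JSON string (e.g. '[ "hd_wrinkle", ... ]'): decode it again.
    raw_actions = info.get("dst_actions")
    actions = json.loads(raw_actions) if raw_actions else []
    return {"action": entry.get("action"), "units": units, "dst_actions": actions}
```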

Response samples

- 200

- 400

- 401

{
  "status": 200,
  "result": {
    "next_token": 13045969587275114,
    "history": [
      {
        "id": 38173479337460984,
        "timestamp": 1703146067000,
        "action": "photo_enhance",
        "target_id": "7TWFkuE2Z9uR0NOxSmQ1rh4PJ5Zx7YJ5U9SAz51q0QHVwMP+Jb1XbjPsf7ZgUgLY",
        "info": {
          "credits": [
            {
              "id": 24409059381740548,
              "amount": -2
            },
            {
              "id": 27297116883780870,
              "amount": 0
            }
          ],
          "dst_actions": "[ \"hd_wrinkle\", \"hd_pore\", \"hd_texture\" ]"
        }
      }
    ]
  }
}

Webhook

Webhooks allow your application to receive asynchronous notifications when an AI task completes with either a success or error status. Notifications are sent to an HTTP endpoint you control and follow the Standard Webhooks Specification.

Webhook Secret

Construction of Webhook Secret

We use the HMAC-SHA256 signature scheme. The webhook secret is base64 encoded and prefixed with whsec_ for easy identification.

Example webhook secret:

whsec_NDQzMzYxNzkzMzE0NjYyNDM6OTIxOTcwNDIxODQ

Implement Webhook with Standard Webhooks Library

We strongly recommend using an official implementation of the Standard Webhooks library, so you do not have to worry about the signature validation details.

Using an official implementation also ensures secure and correct signature validation.

Implement Webhook by Yourself

Make sure you understand how the webhook secret is constructed; refer to the Symmetric section of the Standard Webhooks signature scheme.

When signing the signature input, remove the whsec_ prefix and base64-decode the remaining string to obtain the actual secret bytes for the HMAC-SHA256 hash.

Webhook Request Example

POST https://yourdomain.com/webhook-endpoint

Content-Type: application/json

webhook-id: msg_1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4:1761112848

webhook-timestamp: 1761112900

webhook-signature: v1,vyVNWrjoZcBK1JXrFGkdDKK2slo5+Q5yfzpkHmqO5R0=

{

"created_at": 1761112848,

"data": {

"task_id": "1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4",

"task_status": "success"

}

}

HTTP Headers

webhook-id

A unique identifier for the webhook delivery. This value remains consistent across retries and should be used for idempotency handling.

webhook-timestamp

The Unix epoch timestamp (seconds) when the webhook was sent.

webhook-signature

'v1' followed by a comma (,), followed by the base64 encoded HMAC-SHA256 signature.

'v1' indicates the version of the signature scheme, and is currently the only supported version.

The base64 encoded HMAC-SHA256 signature is the result of signing signature input using your webhook secret.

Signature input format:

{webhook-id}.{webhook-timestamp}.{raw-minified-json-body}

Example signed content:

msg_1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4:1761112848.1761112900.{"created_at":1761112848,"data":{"task_id":"1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4","task_status":"success"}}
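If you verify signatures yourself, the steps above fit in a few lines of Python's standard library. This is a minimal sketch, not a replacement for an official Standard Webhooks library. Assumptions: the `headers` mapping uses the lowercase header names shown above; the raw body is the exact bytes received (re-serializing parsed JSON may change whitespace or key order); the 300-second timestamp tolerance mirrors the five-minute window commonly used by Standard Webhooks implementations and is not stated in this document; missing base64 padding is added defensively, since the example secret above is 39 characters long.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional


def secret_bytes(secret: str) -> bytes:
    """Drop the whsec_ prefix and base64-decode the rest, adding any missing padding."""
    encoded = secret[len("whsec_"):] if secret.startswith("whsec_") else secret
    return base64.b64decode(encoded + "=" * (-len(encoded) % 4))


def signed_content(msg_id: str, timestamp: str, raw_body: bytes) -> bytes:
    """Build {webhook-id}.{webhook-timestamp}.{raw body exactly as received}."""
    return f"{msg_id}.{timestamp}.".encode() + raw_body


def sign(secret: str, msg_id: str, timestamp: str, raw_body: bytes) -> str:
    """Return 'v1,' plus the base64 HMAC-SHA256 of the signed content."""
    digest = hmac.new(secret_bytes(secret), signed_content(msg_id, timestamp, raw_body),
                      hashlib.sha256).digest()
    return "v1," + base64.b64encode(digest).decode()


def verify(secret: str, headers: Dict[str, str], raw_body: bytes,
           tolerance: int = 300, now: Optional[int] = None) -> bool:
    """True if any signature in webhook-signature matches and the timestamp is fresh."""
    timestamp = headers["webhook-timestamp"]
    current = int(time.time()) if now is None else now
    if abs(current - int(timestamp)) > tolerance:
        return False  # reject stale or far-future deliveries (replay protection)
    expected = sign(secret, headers["webhook-id"], timestamp, raw_body).encode()
    # The header may hold several space-separated signatures; accept any match.
    return any(hmac.compare_digest(expected, candidate.encode())
               for candidate in headers["webhook-signature"].split())
```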

Request Body

created_at

Unix epoch timestamp (seconds) indicating when the task completed.

data

Contains the event payload.

| Field | Description |
|---|---|
| task_id | The task identifier returned when the task was created. Use this ID to query the final task result. |
| task_status | Task completion status. Possible values: success, error. |

Webhook Integration Guide

1. Prepare a webhook endpoint on your server. Ensure the endpoint accepts POST requests and is accessible via HTTPS.

2. Create a webhook in the API Console.

3. Run an AI task and record the task_id.

4. Process webhook notifications, using the task_id to retrieve task results.
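Step 4 can be sketched as a framework-independent handler: verify the delivery, skip repeats using webhook-id (which stays the same across retries), and hand back the task_id for the follow-up result query. Assumptions: `verify` is whatever verifier you use (for example, one from an official Standard Webhooks library); the in-memory `seen_ids` set stands in for durable storage you would use in production; `handle_webhook` is an illustrative name, and wiring it into an HTTP server is left out.

```python
import json
from typing import Callable, Dict, Optional, Set

Verifier = Callable[[Dict[str, str], bytes], bool]


def handle_webhook(headers: Dict[str, str], raw_body: bytes, verify: Verifier,
                   seen_ids: Set[str]) -> Optional[Dict[str, str]]:
    """Return task_id/task_status for a new, verified delivery; None for a repeat."""
    if not verify(headers, raw_body):
        raise PermissionError("webhook signature verification failed")
    delivery_id = headers["webhook-id"]
    if delivery_id in seen_ids:
        return None  # retries reuse the same webhook-id, so skip duplicates
    data = json.loads(raw_body)["data"]
    seen_ids.add(delivery_id)
    return {"task_id": data["task_id"], "task_status": data["task_status"]}
```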

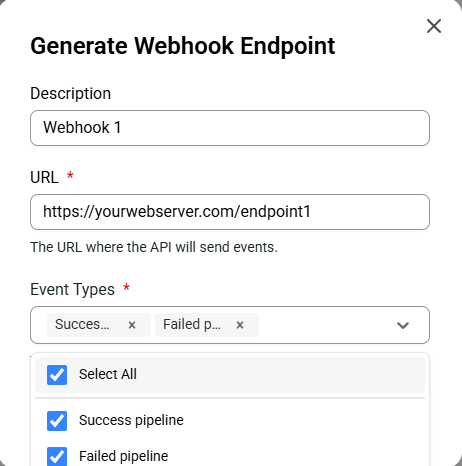

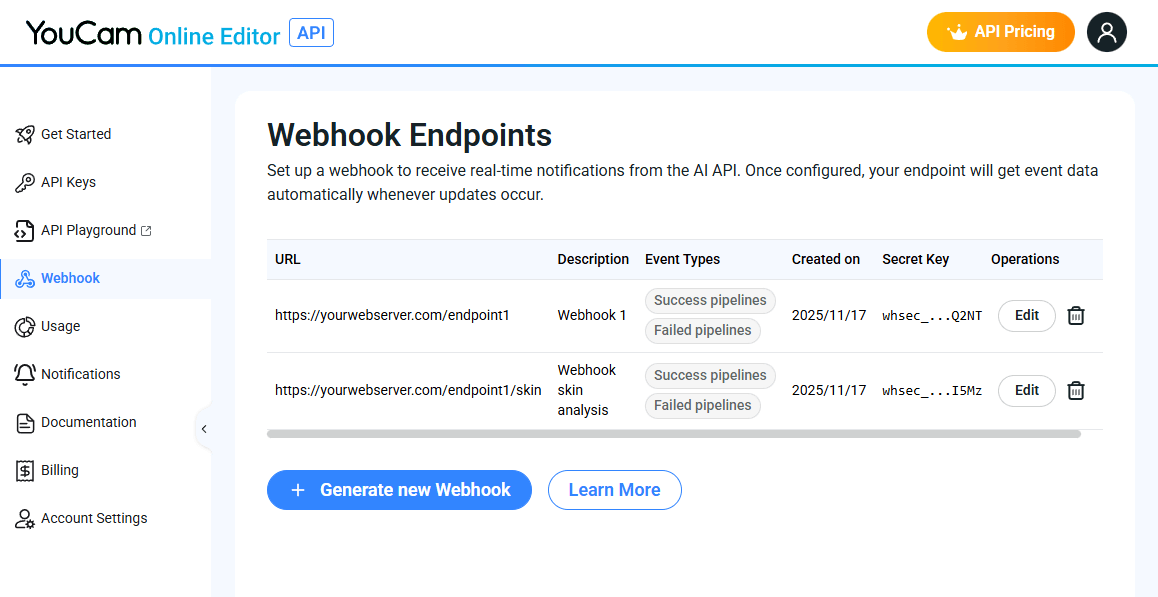

Creating a Webhook Endpoint

Visit the API Console's Webhook Management page:

https://yce.makeupar.com/api-console/en/webhook/

1. Locate the Webhook Section

2. Create a New Webhook Endpoint

You may configure up to 10 webhook endpoints concurrently.

3. Secure Your Webhook Secret

Your webhook secret is used to validate the webhook-signature.

Keep it safe and never expose it publicly.

4. Manage Existing Webhooks

Handling Webhook Requests on Your Server

We recommend using an official implementation of the Standard Webhooks Library:

This ensures secure and correct signature validation.

You can also test or debug payloads using the Standard Webhooks Simulator:

https://www.standardwebhooks.com/simulate

Verifying Webhook Signatures

Consider the webhook request example:

POST https://yourdomain.com/webhook-endpoint

Content-Type: application/json

webhook-id: msg_1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4:1761112848

webhook-timestamp: 1761112900

webhook-signature: v1,vyVNWrjoZcBK1JXrFGkdDKK2slo5+Q5yfzpkHmqO5R0=

Request body:

{

"created_at": 1761112848,

"data": {

"task_id": "1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4",

"task_status": "success"

}

}

Signature input format will be like:

{webhook-id}.{webhook-timestamp}.{raw-minified-json-body}

Example signed content:

msg_1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4:1761112848.1761112900.{"created_at":1761112848,"data":{"task_id":"1eWPv9cWJCnEP99UJncmVJ6KjK_xVXRhZPe_eSGnRNbLlXEPjiG3gb3Usg9le3_4","task_status":"success"}}

Verify the signature by computing the HMAC-SHA256 of the signed content with your webhook secret, base64-encoding the result, and comparing it with the signature in the received webhook-signature header. Example:

v1,vyVNWrjoZcBK1JXrFGkdDKK2slo5+Q5yfzpkHmqO5R0=

Overview of MCP

The Model Context Protocol (MCP) is a lightweight, JSON‑based wrapper that enables client applications (e.g., Cursor, Copilot in VS Code, Claude for Desktop) to invoke the YouCam AI services without dealing with low‑level HTTP details.

Key benefits: By adding a single entry to MCP configuration JSON file and supplying your YouCam API key, any MCP‑compatible client (Cursor, Copilot in VS Code, Claude for Desktop) can instantly access the full suite of YouCam AI services - skin analysis, cloth virtual try‑on, hair styling, fashion rendering, and more functions. The client abstracts request formatting, authentication handling, and asynchronous polling, allowing developers to focus on workflow integration rather than low‑level API mechanics.

1. Prerequisites

- An MCP‑compatible client installed (Cursor, Copilot in VS Code, Claude for Desktop, etc.).

- Network access to https://mcp-api-01.makeupar.com.

- A valid YouCam API key (see the next section).

The client itself already implements request/response serialization and polling logic; no additional code is required from the developer.

2. Obtaining an API Key

- Navigate to the API‑key console: https://yce.makeupar.com/api-console/en/api-keys/

- Create a new key or copy an existing one.

- Store the key securely (environment variable, secret manager, etc.).

Example environment variable (adjust for your deployment):

YOUR_API_KEY=your‑api‑key

3. Configuring the MCP configuration JSON file

MCP clients read a JSON configuration file, typically named mcp.json (the file name and location vary by client). Add an entry for the YouCam MCP server as shown below:

{

"mcpServers": {

"youcam-api-mcp": {

"url": "https://mcp-api-01.makeupar.com/mcp",

"headers": {

"Authorization": "Bearer YOUR_API_KEY"

}

}

}

}

Configuration steps

| Step | Action |
|---|---|
| 1. Set up the MCP configuration JSON file | Put the file in the root of your project or in the client-specific configuration directory (refer to each client's documentation). |
| 2. Replace the placeholder | Substitute YOUR_API_KEY with the value obtained in Section 2, or reference an environment variable (${YOUR_API_KEY}) if supported by the client. |
| 3. Verify connectivity | Open the client UI and confirm that “YouCam API MCP” appears as a selectable service. |

The configuration is static; any change to the key requires a client reload.
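To avoid pasting the key by hand, the configuration can be generated from the YOUR_API_KEY environment variable used in Section 2. A minimal sketch that produces the generic entry shown above; some clients expect a different shape (for example, an added "type": "http" field or a different top-level key), so adjust it per client. Note that the written file contains the key in plain text, so keep it out of version control. Function names are illustrative.

```python
import json
import os


def build_mcp_config(api_key: str) -> dict:
    """Return the youcam-api-mcp entry from the example above with the key filled in."""
    return {
        "mcpServers": {
            "youcam-api-mcp": {
                "url": "https://mcp-api-01.makeupar.com/mcp",
                "headers": {"Authorization": f"Bearer {api_key}"},
            }
        }
    }


def write_mcp_config(path: str = "mcp.json") -> None:
    """Write the config using the YOUR_API_KEY environment variable."""
    api_key = os.environ.get("YOUR_API_KEY")
    if not api_key:
        raise RuntimeError("set the YOUR_API_KEY environment variable first")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(build_mcp_config(api_key), f, indent=2)
```

Because the configuration is static, rerun the script and reload the client whenever the key changes.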

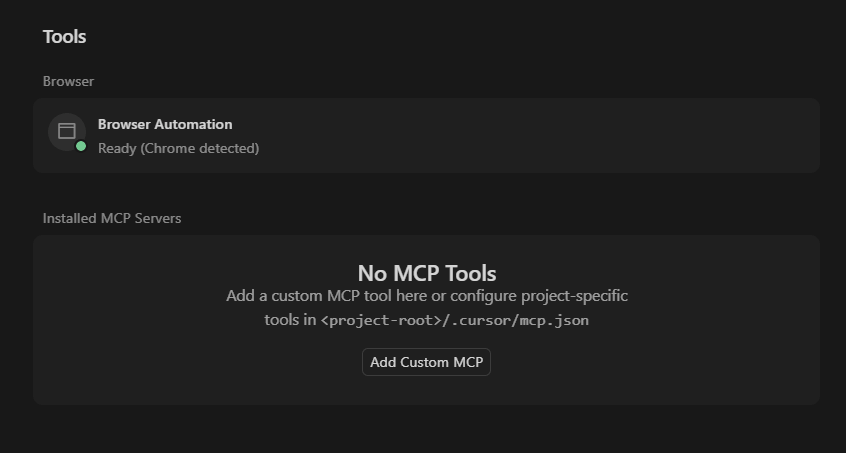

Integration with Popular MCP‑Aware Clients

Cursor

Open Settings → Tools & MCP → Add Custom MCP.

Add the following settings to the mcp.json file:

    {
      "mcpServers": {
        "youcam-api-mcp": {
          "url": "https://mcp-api-01.makeupar.com/mcp",
          "type": "http",
          "headers": {
            "Authorization": "Bearer YOUR_API_KEY"
          }
        }
      }
    }

Navigate to Cursor Settings → Tools & MCP.

Enable or disable the desired tools in the 'Installed MCP Servers' section.

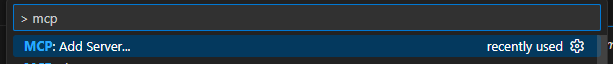

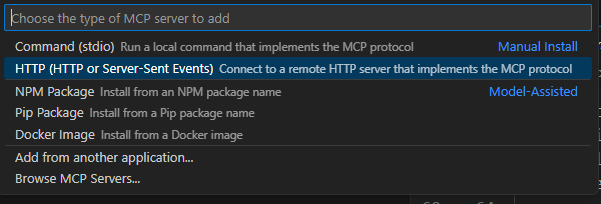

Copilot in VS Code (Visual Studio Code)

Copilot in VS Code option 1:

- Run the command > MCP: Add Server.

- Choose HTTP.

- Enter the server URL (https://mcp-api-01.makeupar.com/mcp).

- Name the MCP server.

- In the header setting, fill in your API key.

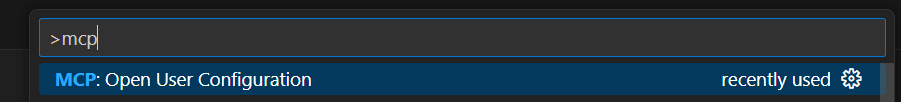

Copilot in VS Code option 2:

Run > MCP: Open User Configuration and update the youcam-api-mcp entry in mcp.json as follows:

    {
      "servers": {
        "youcam-api-mcp": {
          "url": "https://mcp-api-01.makeupar.com/mcp",
          "type": "http",
          "headers": {
            "Authorization": "Bearer YOUR_API_KEY"
          }
        }
      }
    }

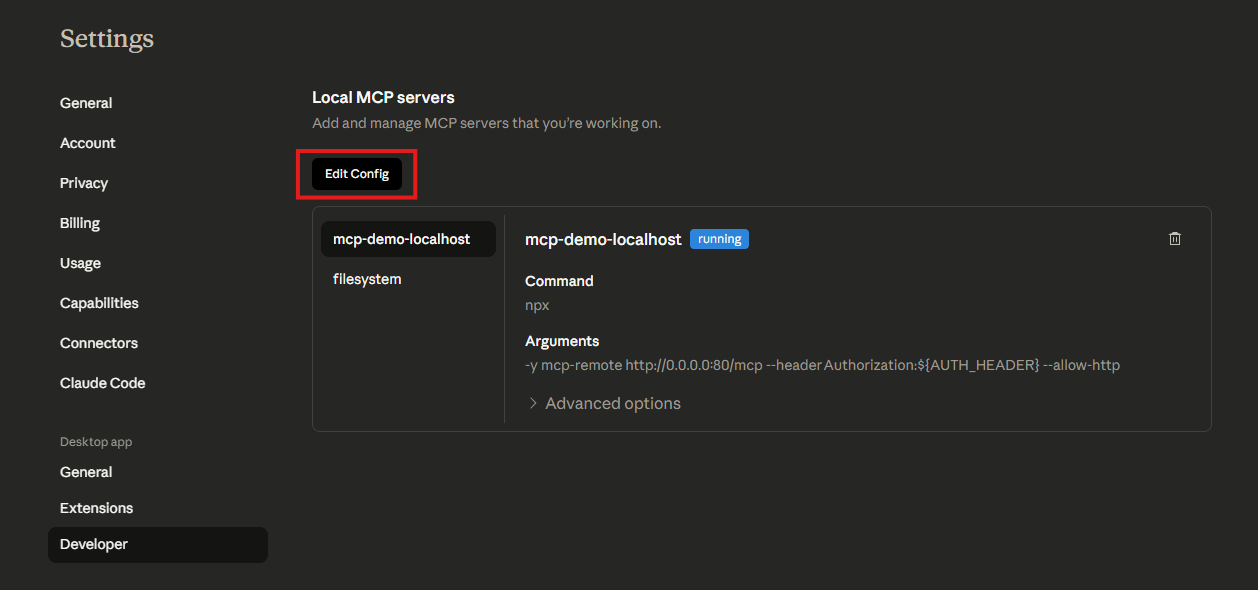

Claude for Desktop

Open Settings → Developer → Edit config to open the claude_desktop_config.json file. Update the mcpServers section as shown below and replace the YOUR_API_KEY part with your actual API key:

    {
      "mcpServers": {
        "youcam-api-mcp": {
          "command": "npx",
          "args": [
            "-y",
            "mcp-remote",
            "https://mcp-api-01.makeupar.com/mcp",
            "--header",
            "Authorization:${AUTH_HEADER}"
          ],
          "env": {
            "AUTH_HEADER": "Bearer YOUR_API_KEY"
          }
        }
      }
    }

- Choose File → Exit to quit the Claude desktop app, then restart it so the new configuration is loaded.

YouCam AI Capabilities Exposed via MCP

Below is a categorical overview of the APIs available on the youcam-api-mcp server. Each entry indicates whether the operation can be invoked directly or requires a template_id obtained from a dedicated "templates" endpoint.

Skin and Face

| API | Execution Type | Brief Description |
|---|---|---|
| AI‑Skin‑Analysis | Direct | Analyses skin texture, pigmentation, hydration, pores, etc., and returns personalized skincare recommendations. |
| AI‑Aging‑Generator | Direct | Generates a series of age-progressed images from a single selfie (youth → older). |
| AI‑Skin‑Tone‑Analysis | Direct | Detects facial skin tone together with eye, eyebrow, lip & hair colours for colour‑matching recommendations. |
| AI‑Face‑Analyzer | Direct | Examines facial geometry (eye shape, nose, cheekbones, etc.) to drive custom beauty or product suggestions. |

Beauty API

| API | Execution Type | Brief Description |
|---|---|---|
| AI‑Makeup‑Virtual‑TryOn | Direct (Pattern‑Based**) | Applies professionally designed makeup virtual try-on; first retrieve available pattern names via AI‑Makeup‑Virtual‑Try‑On‑Pattern‑Name. |
| AI‑Makeup‑Virtual‑Try‑On‑Pattern‑Name | Template/Pattern Query | Returns a list of predefined makeup patterns (pattern_id). |
| AI‑Look‑Vto | Direct (Template‑Based*) | Applies curated “look” styles to an image; retrieve style IDs via AI‑Look‑Virtual‑TryOn‑Templates. |
| AI‑Look‑Virtual‑TryOn‑Templates | Template/Pattern Query | Lists available look templates (template_id). |

* Template‑based APIs require a preceding call to the corresponding “templates” endpoint to obtain an identifier that is then supplied in the execution request.

** The AI Makeup API supports the following effects: SkinSmoothEffect, BlushEffect, BronzerEffect, ConcealerEffect, ContourEffect, EyebrowsEffect, EyelinerEffect, EyeshadowEffect, EyelashesEffect, FoundationEffect, HighlighterEffect, LipColorEffect and LipLinerEffect. Each effect has its own JSON structure. Some include a pattern, others may include a texture, and most contain at least one colour parameter within a palette structure.

It is recommended to copy the sample code for the makeup effect you wish to apply from AI-Makeup-Vto/Inputs-and-Outputs and include the pattern JSON when sending to the LLM. This ensures the LLM understands what AI Makeup supports and what you intend to apply.

For example, a lipstick sample effect is shown below:

    {
      "category": "lip_color",  // string, const "lip_color"
      "shape": {  // object, driven by lipshape.json
        "name": "original"  // string, must match a label from lipshape.json
      },
      "morphology": {  // optional object
        "fullness": 50,  // integer, range 0 to 100 (default 0)
        "wrinkless": 50  // integer, range 0 to 100 (default 0)
      },
      "palettes": [  // minimum items depend on style; often one or more
        {
          "color": "#ff0000",  // string, hex colour "#RRGGBB"
          "texture": "matte",  // string, enum ["matte","gloss","holographic","metallic","satin","sheer","shimmer"]
          "colorIntensity": 50,  // integer, range 0 to 100
          "gloss": 50,  // integer, required if texture is gloss, holographic, metallic, sheer or shimmer
          "shimmerColor": "#ff0000",  // string, required if texture is holographic, metallic or shimmer
          "shimmerIntensity": 50,  // integer, required if texture is holographic, metallic or shimmer
          "shimmerDensity": 50,  // integer, required if texture is holographic, metallic or shimmer
          "shimmerSize": 50,  // integer, required if texture is holographic, metallic or shimmer
          "transparencyIntensity": 50  // integer, required if texture is gloss, sheer or shimmer
        }
      ],
      "style": {
        "type": "full",  // string, enum ["full","ombre","twoTone"]
        "innerRatio": 50,  // integer, required if type is ombre
        "featherStrength": 50  // integer, required if type is ombre
      }
    }

The lipstick pattern JSON can be found in the Full Pattern Catalogue at: https://plugins-media.makeupar.com/wcm-saas/shapes/lipshape.json. It is also available in AI-Makeup-Vto/Inputs-and-Outputs within the API documentation.
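Before sending a lip_color effect (for example, one an LLM filled in for you), it can help to check the conditional requirements spelled out in the sample's comments. The Python sketch below encodes only those comment rules; lip_color_problems is a hypothetical helper, and the authoritative validation is whatever the API itself performs.

```python
# Conditional requirements transcribed from the comments in the lip_color sample.
VALID_TEXTURES = {"matte", "gloss", "holographic", "metallic", "satin", "sheer", "shimmer"}
NEEDS_GLOSS = {"gloss", "holographic", "metallic", "sheer", "shimmer"}
NEEDS_SHIMMER = {"holographic", "metallic", "shimmer"}
NEEDS_TRANSPARENCY = {"gloss", "sheer", "shimmer"}
SHIMMER_FIELDS = ("shimmerColor", "shimmerIntensity", "shimmerDensity", "shimmerSize")
STYLE_TYPES = {"full", "ombre", "twoTone"}


def lip_color_problems(effect: dict) -> list[str]:
    """Return invalid or missing field paths; an empty list means the checks passed."""
    problems = []
    for i, palette in enumerate(effect.get("palettes", [])):
        texture = palette.get("texture")
        if texture not in VALID_TEXTURES:
            problems.append(f"palettes[{i}].texture")
            continue
        required = []
        if texture in NEEDS_GLOSS:
            required.append("gloss")
        if texture in NEEDS_SHIMMER:
            required.extend(SHIMMER_FIELDS)
        if texture in NEEDS_TRANSPARENCY:
            required.append("transparencyIntensity")
        problems.extend(f"palettes[{i}].{name}" for name in required if name not in palette)
    style = effect.get("style")
    if style is not None:  # only checked when a style object is present
        if style.get("type") not in STYLE_TYPES:
            problems.append("style.type")
        elif style["type"] == "ombre":
            problems.extend(
                f"style.{name}" for name in ("innerRatio", "featherStrength") if name not in style
            )
    return problems
```

For a sheer ombre lipstick that only sets gloss and innerRatio, the helper reports the missing transparencyIntensity and featherStrength fields.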

Fashion API

| API | Execution Type | Brief Description |
|---|---|---|
| AI-Cloth | Direct | Virtual clothing try-on. |
| AI-Cloth-Templates | Template/Pattern Query | Queries predefined clothing templates. |
| AI-Fabric | Direct | Generates or analyses fabrics. |
| AI-Fabric-Templates | Template/Pattern Query | Retrieves fabric templates. |
| AI-Bag | Direct | Virtual bag try-on or placement. |
| AI-Scarf | Direct | Virtual scarf try-on. |
| AI-Shoes | Direct | Virtual shoes try-on. |
| AI-Hat | Direct | Virtual hat try-on. |

Jewellery and Watch

| API | Execution Type | Brief Description |
|---|---|---|
| AI-Ring-Virtual-Try-On | Direct | Virtual ring fitting and preview. |
| AI-Bracelet-Virtual-Try-On | Direct | Virtual bracelet try-on. |
| AI-Watch-Virtual-Try-On | Direct | Virtual watch fitting. |
| AI-Earring-Virtual-Try-On | Direct | Virtual earring try-on. |
| AI-Necklace-Virtual-Try-On | Direct | Virtual necklace try-on. |

Hair API

| API | Execution Type | Brief Description |
|---|---|---|
| AI‑Hairstyle‑Generator | Direct | Generates realistic hairstyles; can also use predefined styles from AI‑Hairstyle‑Generator‑Templates. |
| AI‑Hairstyle‑Generator‑Templates | Template/Pattern Query | Lists hairstyle template IDs. |
| AI‑Hair‑Color | Direct | Changes hair colour with adjustable intensity sliders. |
| AI‑Hair‑Extension | Direct | Simulates extensions of various lengths and styles; requires a template_id from AI‑Hair‑Extension‑Templates. |
| AI‑Hair‑Extension‑Templates | Template/Pattern Query | Provides extension style IDs. |
| AI‑Hair‑Volume‑Generator | Direct | Adds natural volume to fine or thinning hair; optional presets via AI‑Hair‑Volume‑Generator‑Templates. |
| AI‑Hair‑Volume‑Generator‑Templates | Template/Pattern Query | Lists volume‑enhancement template IDs. |
| AI‑Hair‑Bang‑Generator | Direct | Applies realistic bangs; predefined bang styles available via AI‑Hair‑Bang‑Generator‑Templates. |
| AI‑Hair‑Bang‑Generator‑Templates | Template/Pattern Query | Returns bang style IDs. |
| AI‑Wavy‑Hair | Direct | Generates wavy or curly hair effects; preset wave patterns from AI‑Wavy‑Hair‑Templates. |
| AI‑Wavy‑Hair‑Templates | Template/Pattern Query | Lists wavy‑hair template IDs. |
| AI‑Beard‑Style‑Generator | Direct | Simulates a variety of beard styles; additional preset options via AI‑Beard‑Style‑Generator‑Templates. |
| AI‑Beard‑Style‑Generator‑Templates | Template/Pattern Query | Provides beard style IDs. |
| AI‑Hair‑Frizziness‑Detection | Direct | Quantifies hair frizz level from three‑view photos (front, left, right). |
| AI‑Hair‑Length‑Detection | Direct | Measures hair length and categorises it into predefined ranges. |
| AI‑Hair‑Type‑Detection | Direct | Identifies curl pattern, thickness and overall hair type. |

* Template‑based APIs require you to first request the list of available templates, select a template_id, then invoke the main endpoint with that identifier.

Image Editing

| API | Execution Type | Brief Description |
|---|---|---|
| AI-Photo-Lighting | Direct | Enhances or corrects lighting. |
| AI-Photo-Background-Removal | Direct | Removes backgrounds. |
| AI-Object-Removal-Pro | Direct | Advanced object removal. |
| AI-Object-Removal | Direct | Regular object removal. |
| AI-Photo-Enhance | Direct | Improves image quality. |
| AI-Photo-Colorize | Direct | Adds colour to black-and-white images. |
| AI-Photo-Color-Correction | Direct | Adjusts colour balance. |
| AI-Replace | Direct | Replaces selected image regions. |
| AI-Image-Extender | Direct | Extends image borders (outpainting). |
| AI-Headshot-Generator | Direct | Creates headshots. |
| AI-Headshot-Generator-Templates | Template/Pattern Query | Headshot style templates. |
| AI-Studio-Generator | Direct | Generates studio-style portraits. |
| AI-Studio-Generator-Templates | Template/Pattern Query | Studio look templates. |
| AI-Avatar-Generator | Direct | Generates avatars. |
| AI-Avatar-Generator-Templates | Template/Pattern Query | Avatar style templates. |
| AI-Makeup-Transfer | Direct | Transfers makeup between faces. |
| AI-Face-Swap | Direct | Swaps faces in photos. |
| AI-Face-Swap-Face-Detection | Direct | Detects faces specifically for swapping. |

Video Editing

| API | Execution Type | Brief Description |
|---|---|---|
| AI-Video-Enhance | Direct | Enhances video quality. |
| AI-Face-Swap-Video | Direct | Swaps faces in video. |
| AI-Style-Transfer-Video | Direct | Applies artistic style to video. |
| AI-Style-Transfer-Video-Templates | Template/Pattern Query | Queries built-in video style templates. |

Utility

| API | Execution Type | Brief Description |
|---|---|---|
| Get‑Running‑Task‑Status | Direct | Checks the status and results of a previously started asynchronous task using its task_id. This endpoint is used internally by MCP‑aware clients for polling; developers typically do not call it directly. |

Asynchronous Task Management (Utility API)

All AI APIs run asynchronously:

- The initial request returns a task_id.

- The client automatically polls Get-Running-Task-Status until the task reaches a terminal state (completed, failed).

Get-Running-Task-Status is also useful for retrieving a completed task's result within the retention period after the client's polling has timed out.

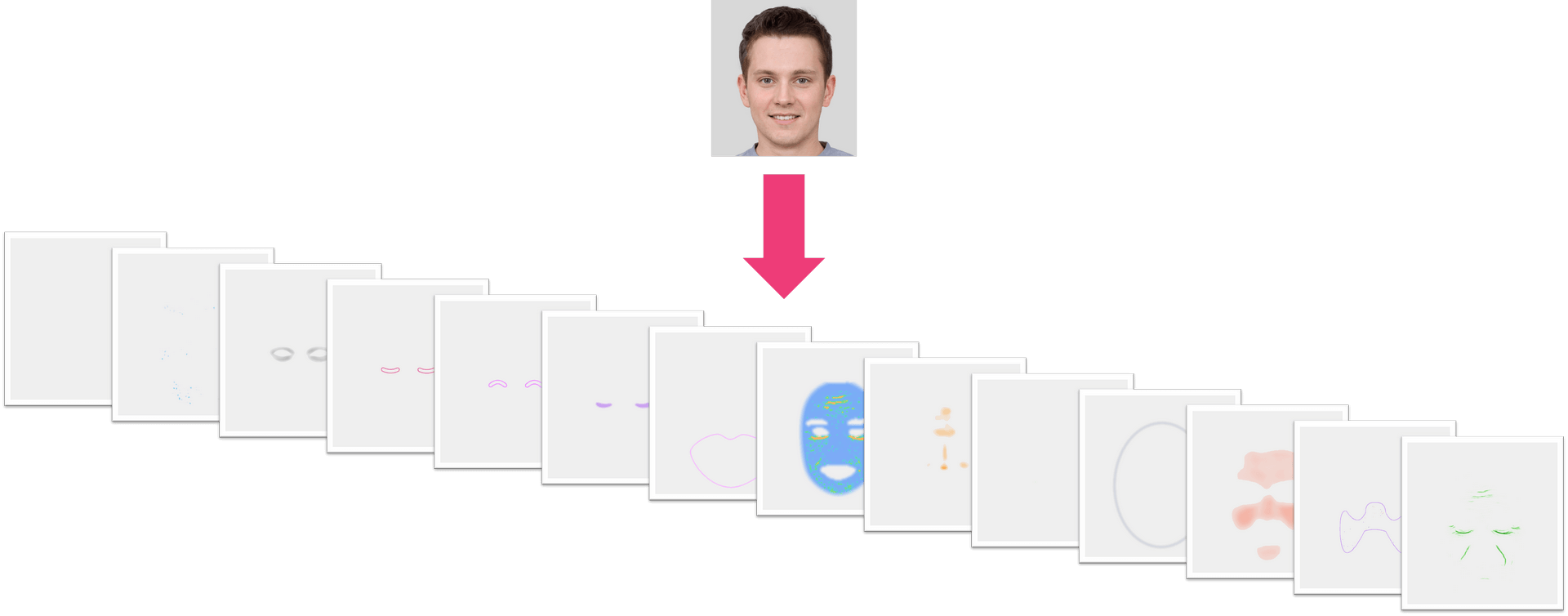

AI Skin Analysis

AI skincare analysis technology harnesses the power of artificial intelligence to analyze various aspects of the skin, from texture and pigmentation to hydration and pore size, with remarkable precision. By employing advanced algorithms and machine learning, AI skin analysis can offer personalized recommendations and skincare routines tailored to an individual's unique skin type and concerns.

This not only enhances the effectiveness of skincare products but also empowers users to make informed decisions about their skincare regimen. With the integration of AI skin analysis, individuals can now embark on a journey towards healthier, more radiant skin, guided by data-driven insights and the promise of more effective skincare solutions.

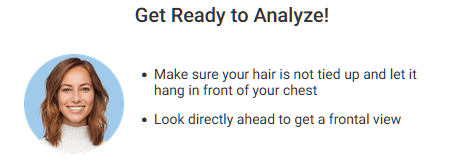

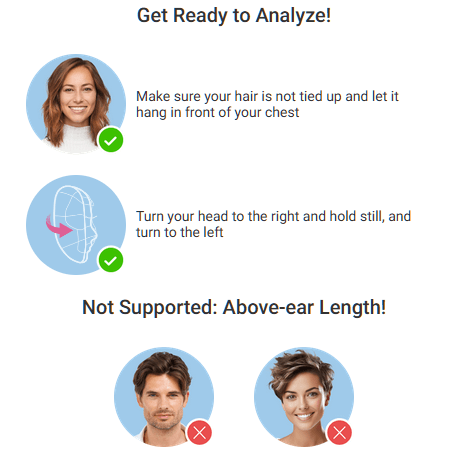

How to Take Photos for AI Skin Analysis

- Take one clear selfie facing forward, looking straight into the camera so the photo shows a front-on view.

- Keep your hair away from your face so your forehead is fully visible.

- Alternatively, use the JS Camera Kit to take the photo.

Workflow

Skin Analysis API Usage Guide: This guide explains how to upload an image and create a skin analysis task using the File API and the AI Task API.

Step 1: Resize your source image

Resize your photo to fit the supported dimensions: up to 4096 pixels on the long side and at least 480 pixels on the short side for SD, or up to 4096 pixels on the long side and at least 1080 pixels on the short side for HD. See details in File Specs & Errors.
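The dimension rules above can be turned into a small helper that computes a target size before you resize. This is a sketch under stated assumptions: fit_dimensions is a hypothetical name, it only scales down (keeping the aspect ratio), and it rejects images whose short side is already too small rather than upscaling them, on the assumption that enlarging a photo adds no real skin detail.

```python
MAX_LONG_SIDE = 4096
MIN_SHORT_SIDE = {"SD": 480, "HD": 1080}


def fit_dimensions(width: int, height: int, mode: str = "SD") -> tuple[int, int]:
    """Scale (width, height) down so the long side is <= 4096, keeping the aspect ratio.

    Raises ValueError if the short side ends up below the SD/HD minimum.
    """
    min_short = MIN_SHORT_SIDE[mode]
    long_side = max(width, height)
    if long_side > MAX_LONG_SIDE:
        # Integer floor division so the long side can never exceed the limit.
        width = width * MAX_LONG_SIDE // long_side
        height = height * MAX_LONG_SIDE // long_side
    short_side = min(width, height)
    if short_side < min_short:
        raise ValueError(f"short side {short_side}px is below the {min_short}px {mode} minimum")
    return width, height
```

For example, fit_dimensions(6000, 8000, "HD") returns (3072, 4096); you could then pass that size to an image library such as Pillow's Image.resize.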

Step 2: Upload File Metadata via File API

- Image requirements: see details in File Specs & Errors.

Send a POST request to initialise the file upload:

    curl --request POST \
      --url https://yce-api-01.makeupar.com/s2s/v2.0/file/skin-analysis \
      --header 'Authorization: Bearer YOUR_API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "files": [
          {
            "content_type": "image/png",
            "file_name": "skin_analysis_01_3dbd1b6683.png",
            "file_size": 547541
          }
        ]
      }'
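The request body above can also be built from a local file, so that file_name, content_type and file_size always match the bytes you upload later. A minimal Python sketch using only the standard library; build_file_init_payload and init_upload are hypothetical helper names, and reading "10MB" as 10 * 1024 * 1024 bytes is an assumption.

```python
import json
import mimetypes
import os
import urllib.request

API_BASE = "https://yce-api-01.makeupar.com/s2s/v2.0"
ALLOWED_TYPES = {"image/jpeg", "image/png"}  # jpg/jpeg/png per the Supported Formats table
MAX_BYTES = 10 * 1024 * 1024  # assumption: "< 10MB" read as 10 MiB


def build_file_init_payload(path: str) -> dict:
    """Build the JSON body for POST /file/skin-analysis from a local image file."""
    content_type, _ = mimetypes.guess_type(path)
    if content_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported content type: {content_type}")
    size = os.path.getsize(path)
    if size >= MAX_BYTES:
        raise ValueError(f"file is {size} bytes; it must be under 10MB")
    return {
        "files": [
            {"content_type": content_type, "file_name": os.path.basename(path), "file_size": size}
        ]
    }


def init_upload(payload: dict, api_key: str) -> dict:
    """Send the File API request and return the parsed JSON response."""
    req = urllib.request.Request(
        f"{API_BASE}/file/skin-analysis",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```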

Important: Simply calling the File API does not upload your file. You must additionally upload the file to the URL provided in the File API response. That URL is your upload destination; make sure the file is successfully transferred there before proceeding.

Warning: If you do not upload the file to the URL provided in the File API response, AI API calls will fail with a 500 Server Error / unknown_internal_error or a 404 Not Found error.

Step 3: Retrieve Upload URL and File ID

The response includes:

- requests.url: pre-signed URL for the image upload.

- file_id: identifier used to create an AI task.

Example Response:

    {
      "status": 200,
      "data": {
        "files": [
          {
            "content_type": "image/png",
            "file_name": "skin_analysis_01_3dbd1b6683.png",
            "file_id": "SaGaqpDgKwFrVBgMpQMA3HY0LeqdT9/13W5TOD8/u/FfjK3xgCQ+hRt9MJXBFaud",
            "requests": [
              {
                "method": "PUT",
                "url": "https://yce-us.s3-accelerate.amazonaws.com/demo/ttl30/...signature...",
                "headers": {
                  "Content-Length": "547541",
                  "Content-Type": "image/png"
                }
              }
            ]
          }
        ]
      }
    }
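Extracting the two values you need from this response can be sketched as follows, assuming a single file per request as in the example; extract_upload_target is a hypothetical helper name.

```python
def extract_upload_target(response: dict) -> tuple[str, dict]:
    """Return (file_id, upload_request) for the first file in a File API response."""
    if response.get("status") != 200:
        raise RuntimeError(f"File API call failed: {response}")
    file_info = response["data"]["files"][0]
    upload = file_info["requests"][0]  # contains "method", "url" and "headers"
    return file_info["file_id"], upload
```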

Step 4: Upload Image to Pre-signed URL

Use the provided requests.url and headers:

    curl --location --request PUT 'https://yce-us.s3-accelerate.amazonaws.com/demo/ttl30/...signature...' \
      --header 'Content-Type: image/png' \
      --header 'Content-Length: 547541' \
      --data-binary @'./skin_analysis_01_3dbd1b6683.png'
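The same PUT can be issued from Python with urllib by reusing the method, URL and headers returned in Step 3. This is a sketch, not an official client: build_upload_request and upload_file are hypothetical names, and the size check rests on the assumption that the pre-signed URL will likely reject a body whose length differs from the Content-Length declared in Step 2.

```python
import urllib.request


def build_upload_request(upload: dict, data: bytes) -> urllib.request.Request:
    """PUT request for the pre-signed URL, reusing the headers returned in Step 3."""
    headers = dict(upload.get("headers", {}))
    declared = headers.get("Content-Length")
    # Assumption: a body whose size differs from the declared size is likely rejected.
    if declared is not None and int(declared) != len(data):
        raise ValueError(f"body is {len(data)} bytes but {declared} were declared")
    return urllib.request.Request(
        upload["url"], data=data, headers=headers, method=upload.get("method", "PUT")
    )


def upload_file(upload: dict, path: str) -> int:
    """Read the local file, send it to the pre-signed URL and return the HTTP status."""
    with open(path, "rb") as f:
        data = f.read()
    with urllib.request.urlopen(build_upload_request(upload, data), timeout=60) as resp:
        return resp.status
```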

Step 5: Create AI Task

Use the file_id from Step 2 to create a skin analysis task:

    curl --request POST \
      --url https://yce-api-01.makeupar.com/s2s/v2.0/task/skin-analysis \
      --header 'Authorization: Bearer YOUR_API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "src_file_id": "SaGaqpDgKwFrVBgMpQMA3HY0LeqdT9/13W5TOD8/u/FfjK3xgCQ+hRt9MJXBFaud",
        "dst_actions": ["wrinkle", "pore", "texture", "acne"],
        "miniserver_args": {
          "enable_mask_overlay": true,
          "enable_dark_background_hd_pore": true,
          "color_dark_background_hd_pore": "3D3D3D",
          "opacity_dark_background_hd_pore": 0.4
        },
        "format": "json"
      }'

Additional miniserver_args parameters are omitted for brevity; see Inputs & Outputs for the full list.

Once the upload is complete, you can select any skin concerns to analyze using your file ID or image file URL (see Inputs & Outputs). Calling POST 'task/skin-analysis' with the file ID or image file URL then starts the skin analysis task and returns a task_id. Please be advised that simultaneous use of SD and HD skin concern parameters is NOT supported.

- Use an existing public image URL: instead of uploading, you may supply a publicly accessible image URL directly when initiating the AI task.

Example Response:

    {
      "status": 200,
      "data": {
        "task_id": "SaGaqpDgKwFrVBgMpQMA3HY0LeqdT9_13W5TOD8_u_GPi6NqQ3dhlmN-6ntFwhzT"
      }
    }
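Step 5 can be wrapped in a few small helpers. Only the endpoint, headers and field names come from the curl example; the helper names are hypothetical, and the sketch assumes miniserver_args may be omitted when you need no overlay options (the example above always sends it).

```python
import json
import urllib.request
from typing import Optional

API_BASE = "https://yce-api-01.makeupar.com/s2s/v2.0"


def build_task_payload(src_file_id: str, dst_actions: list[str],
                       miniserver_args: Optional[dict] = None) -> dict:
    """Body for POST task/skin-analysis, mirroring the fields of the curl example."""
    payload = {"src_file_id": src_file_id, "dst_actions": list(dst_actions), "format": "json"}
    if miniserver_args:  # assumption: the field can be left out when no options are needed
        payload["miniserver_args"] = dict(miniserver_args)
    return payload


def build_task_request(payload: dict, api_key: str) -> urllib.request.Request:
    return urllib.request.Request(
        f"{API_BASE}/task/skin-analysis",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def create_task(payload: dict, api_key: str) -> str:
    """Start the task and return data.task_id from the response."""
    with urllib.request.urlopen(build_task_request(payload, api_key), timeout=30) as resp:
        body = json.load(resp)
    return body["data"]["task_id"]
```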

Step 6: Poll Task Status

Retrieve task results using the task_id:

    curl --request GET \
      --url https://yce-api-01.makeupar.com/s2s/v2.0/task/skin-analysis/<YOUR_TASK_ID> \
      --header 'Authorization: Bearer YOUR_API_KEY' \
      --header 'Content-Type: application/json'

Use this task_id to monitor the task by polling GET 'task/skin-analysis/<task_id>', which returns the current engine status. Until the engine completes the task, the status remains 'running', and no units are consumed during this stage.

Processed results are retained for 24 hours after completion:

- No need for short-interval polling.

- Flexible polling intervals within the 24-hour window.

Important: Polling is still required to check task status, as execution time is not guaranteed.

The task changes to the 'success' status after the engine successfully processes your input file and generates the result. You will receive a URL for the processed image and a dst_id that allows you to chain another AI task without re-uploading the result image.

Units are consumed only in this case. If the engine fails to process the task, the task's status changes to 'error' and no units are consumed. When deducting units, the system prioritizes those nearing expiration. If the expiration dates are the same, it deducts the units obtained on the earliest date first.
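Because execution time is not guaranteed, a polling loop with a growing interval keeps the request count low while staying inside the 24-hour retention window. A minimal sketch, assuming the GET response carries data.task_status with the values 'running', 'success' and 'error' described above; fetch_task and wait_for_task are hypothetical names, and the interval and timeout defaults are arbitrary choices.

```python
import json
import time
import urllib.request
from typing import Callable

API_BASE = "https://yce-api-01.makeupar.com/s2s/v2.0"


def fetch_task(task_id: str, api_key: str) -> dict:
    """One GET against task/skin-analysis/<task_id>."""
    req = urllib.request.Request(
        f"{API_BASE}/task/skin-analysis/{task_id}",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def wait_for_task(fetch: Callable[[], dict], interval: float = 2.0, max_interval: float = 30.0,
                  timeout: float = 600.0, sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll until task_status is 'success' (return data) or 'error' (raise), doubling the wait."""
    waited = 0.0
    while True:
        body = fetch()
        status = body["data"]["task_status"]
        if status == "success":
            return body["data"]
        if status == "error":
            raise RuntimeError(f"task failed: {body}")
        if waited >= timeout:
            raise TimeoutError(f"task still '{status}' after {waited:.0f}s")
        sleep(interval)
        waited += interval
        interval = min(interval * 2, max_interval)
```

Typical use is wait_for_task(lambda: fetch_task(task_id, api_key)); the sleep parameter only exists so the loop can be exercised without real waiting.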

Step 7: Interpret Results

The response includes:

- ui_score: user-friendly score.

- raw_score: raw analysis score.

- mask_urls: URLs of the detection masks.

Example Response:

    {
      "status": 200,
      "data": {
        "results": {
          "output": [
            {
              "type": "texture",
              "ui_score": 68,
              "raw_score": 57.33,
              "mask_urls": ["https://yce-us.s3-accelerate.amazonaws.com/...texture_output.jpg"]
            },
            {
              "type": "pore",
              "ui_score": 92,
              "raw_score": 95.34,
              "mask_urls": ["https://yce-us.s3-accelerate.amazonaws.com/...pore_output.jpg"]
            }
            // Additional results omitted for brevity
          ]
        },
        "task_status": "success"
      }
    }
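To work with these results programmatically, you can index the output list by concern type. A short sketch over the data object shown above; scores_by_type is a hypothetical helper.

```python
def scores_by_type(task_data: dict) -> dict[str, dict]:
    """Map each analysed concern ("texture", "pore", ...) to its scores and mask URLs."""
    return {
        item["type"]: {
            "ui_score": item.get("ui_score"),
            "raw_score": item.get("raw_score"),
            "mask_urls": item.get("mask_urls", []),
        }
        for item in task_data["results"]["output"]
    }
```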

Debugging Guide

Warning: Please be advised that simultaneous use of SD and HD skin concern parameters is NOT supported. Attempting to deviate from these specifications will result in an InvalidParameters error.

- If you mix HD and SD skin concerns, you will get the following error:

{ "status": 400, "error": "cannot mix HD and SD dst_actions", "error_code": "InvalidParameters" }

- If you misspell a skin concern or send an unknown skin concern, you will get an error such as the following:

{ "status": 400, "error": "Not available dst_action abc123", "error_code": "InvalidParameters" }
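Both errors can be caught locally before calling the API. The sketch below assumes the dst_action names match the category names listed under Categories and Descriptions (the SD names agree with the Step 5 example; the HD names are an assumption). check_dst_actions is a hypothetical helper that returns "HD" or "SD", which also tells you which resize limits apply.

```python
# Names taken from the "Categories and Descriptions" lists in this section.
HD_ACTIONS = {
    "hd_redness", "hd_oiliness", "hd_age_spot", "hd_radiance", "hd_moisture",
    "hd_dark_circle", "hd_eye_bag", "hd_droopy_upper_eyelid", "hd_droopy_lower_eyelid",
    "hd_firmness", "hd_texture", "hd_acne", "hd_pore", "hd_wrinkle",
}
SD_ACTIONS = {
    "wrinkle", "droopy_upper_eyelid", "droopy_lower_eyelid", "firmness", "acne",
    "moisture", "eye_bag", "dark_circle_v2", "age_spot", "radiance", "redness",
    "oiliness", "pore", "texture",
}


def check_dst_actions(actions: list[str]) -> str:
    """Return "HD" or "SD"; raise ValueError mirroring the API's InvalidParameters cases."""
    if not actions:
        raise ValueError("dst_actions is empty")
    for action in actions:
        if action not in HD_ACTIONS and action not in SD_ACTIONS:
            raise ValueError(f"Not available dst_action {action}")
    uses_hd = any(a in HD_ACTIONS for a in actions)
    uses_sd = any(a in SD_ACTIONS for a in actions)
    if uses_hd and uses_sd:
        raise ValueError("cannot mix HD and SD dst_actions")
    return "HD" if uses_hd else "SD"
```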

Real-world examples:

Input Parameter Description

There are two options for controlling the visual output of AI Skin Analysis results: either generate multiple images, with each skin concern displayed as an independent mask, or produce a single blended image using the enable_mask_overlay parameter. By default, the system outputs multiple masks, giving you full control over how to blend each skin concern mask with the image.

Default: enable_mask_overlay false

Set enable_mask_overlay to true

Output ZIP Data Structure Description

The system provides a ZIP file with a 'skinanalysisResult' folder inside. This folder contains a 'score_info.json' file that includes all the detection scores and references to the result images.

The 'score_info.json' file contains all the skin analysis detection results, with numerical scores and the names of the corresponding output mask files.

The PNG files are detection result masks that can be overlaid on your original image. Simply use the alpha values in these PNG files to blend them with your original image, allowing you to see the detection results directly on the source image.
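Blending a mask through its alpha channel is standard "over" compositing: each output channel is mask * alpha + source * (1 - alpha), with alpha scaled to 0..1. The per-pixel sketch below shows the arithmetic; blend_pixel is a hypothetical helper, and it assumes the mask has the same dimensions as the source image. For whole images, Pillow's Image.alpha_composite performs the same operation on two RGBA images of equal size.

```python
def blend_pixel(src: tuple[int, int, int], mask: tuple[int, int, int, int]) -> tuple[int, int, int]:
    """Composite one RGBA mask pixel over one RGB source pixel ("over" operator)."""
    r, g, b, a = mask
    alpha = a / 255  # 0 keeps the source pixel, 255 shows the mask colour fully
    return tuple(round(m * alpha + s * (1 - alpha)) for m, s in zip((r, g, b), src))
```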

File Structure in the Skin Analysis Result ZIP

HD Skincare ZIP

- skinanalysisResult
  - score_info.json
  - hd_acne_output.png
  - hd_age_spot_output.png
  - hd_dark_circle_output.png
  - hd_droopy_lower_eyelid_output.png
  - hd_droopy_upper_eyelid_output.png
  - hd_eye_bag_output.png
  - hd_firmness_output.png
  - hd_moisture_output.png
  - hd_oiliness_output.png
  - hd_radiance_output.png
  - hd_redness_output.png
  - hd_texture_output.png
  - hd_pore_output_all.png
  - hd_pore_output_cheek.png
  - hd_pore_output_forehead.png
  - hd_pore_output_nose.png
  - hd_wrinkle_output_all.png
  - hd_wrinkle_output_crowfeet.png
  - hd_wrinkle_output_forehead.png
  - hd_wrinkle_output_glabellar.png
  - hd_wrinkle_output_marionette.png
  - hd_wrinkle_output_nasolabial.png
  - hd_wrinkle_output_periocular.png

SD Skincare ZIP

- skinanalysisResult
  - score_info.json
  - acne_output.png
  - age_spot_output.png
  - dark_circle_v2_output.png
  - droopy_lower_eyelid_output.png
  - droopy_upper_eyelid_output.png
  - eye_bag_output.png
  - firmness_output.png
  - moisture_output.png
  - oiliness_output.png
  - pore_output.png
  - radiance_output.png
  - redness_output.png
  - texture_output.png
  - wrinkle_output.png

JSON Data Structure (score_info.json)

"all": An object whose "score" field is a floating-point value between 1 and 100 representing the general skin condition. A higher score indicates a healthier and more aesthetically pleasing skin condition.

"skin_age": AI-derived skin age relative to the general population distribution across all age groups.

Each category (or, for categories with subcategories, each subcategory) contains:

- "raw_score": A floating-point value ranging from 1 to 100. A higher score indicates healthier and more aesthetically pleasing skin condition.

- "ui_score": An integer ranging from 1 to 100. The UI Score functions primarily as a psychological motivator in beauty assessment. We adjust the raw scores to produce more favorable results, acknowledging that consumers generally prefer positive evaluations regarding their skin health. This calibration serves to instill greater confidence in users while maintaining the underlying beauty psychology framework.

- "output_mask_name": The filename of the corresponding output mask image.

Categories and Descriptions

HD Skincare:

- "hd_redness": Measures skin redness severity.

- "hd_oiliness": Determines skin oiliness level.

- "hd_age_spot": Detects age spots and pigmentation.

- "hd_radiance": Evaluates skin radiance.

- "hd_moisture": Assesses skin hydration levels.

- "hd_dark_circle": Analyzes the presence of dark circles under the eyes.

- "hd_eye_bag": Detects eye bags.

- "hd_droopy_upper_eyelid": Measures upper eyelid drooping severity.

- "hd_droopy_lower_eyelid": Measures lower eyelid drooping severity.

- "hd_firmness": Evaluates skin firmness and elasticity.

- "hd_texture": Subcategories[whole]; Analyzes overall skin texture.

- "hd_acne": Subcategories[whole]; Detects acne presence.

- "hd_pore": Subcategories[forehead, nose, cheek, whole]; Detects and evaluates pores in different facial regions.

- "hd_wrinkle": Subcategories[forehead, glabellar, crowfeet, periocular, nasolabial, marionette, whole]; Measures the severity of wrinkles in various facial areas.

SD Skincare:

- "wrinkle": General wrinkle analysis.

- "droopy_upper_eyelid": Measures upper eyelid drooping severity.

- "droopy_lower_eyelid": Measures lower eyelid drooping severity.

- "firmness": Evaluates skin firmness and elasticity.

- "acne": Evaluates acne presence.

- "moisture": Measures skin hydration.

- "eye_bag": Detects eye bags.

- "dark_circle_v2": Analyzes dark circles using an alternative method.

- "age_spot": Detects age spots.

- "radiance": Evaluates skin brightness.

- "redness": Measures skin redness.

- "oiliness": Determines skin oiliness.

- "pore": Measures pore visibility.

- "texture": Analyzes overall skin texture.

Sample score_info.json of HD Skincare

{ "hd_redness": { "raw_score": 72.011962890625, "ui_score": 77, "output_mask_name": "hd_redness_output.png" }, "hd_oiliness": { "raw_score": 60.74365234375, "ui_score": 72, "output_mask_name": "hd_oiliness_output.png" }, "hd_age_spot": { "raw_score": 83.23274230957031, "ui_score": 77, "output_mask_name": "hd_age_spot_output.png" }, "hd_radiance": { "raw_score": 76.57244205474854, "ui_score": 79, "output_mask_name": "hd_radiance_output.png" }, "hd_moisture": { "raw_score": 48.694559931755066, "ui_score": 70, "output_mask_name": "hd_moisture_output.png" }, "hd_dark_circle": { "raw_score": 80.1993191242218, "ui_score": 76, "output_mask_name": "hd_dark_circle_output.png" }, "hd_eye_bag": { "raw_score": 76.67280435562134, "ui_score": 79, "output_mask_name": "hd_eye_bag_output.png" }, "hd_droopy_upper_eyelid": { "raw_score": 79.05348539352417, "ui_score": 80, "output_mask_name": "hd_droopy_upper_eyelid_output.png" }, "hd_droopy_lower_eyelid": { "raw_score": 79.97175455093384, "ui_score": 81, "output_mask_name": "hd_droopy_lower_eyelid_output.png" }, "hd_firmness": { "raw_score": 89.66898322105408, "ui_score": 85, "output_mask_name": "hd_firmness_output.png" }, "hd_texture": { "whole": { "raw_score": 66.3921568627451, "ui_score": 75, "output_mask_name": "hd_texture_output.png" } }, "hd_acne": { "whole": { "raw_score": 59.92677688598633, "ui_score": 76, "output_mask_name": "hd_acne_output.png" } }, "hd_pore": { "forehead": { "raw_score": 79.59770965576172, "ui_score": 80, "output_mask_name": "hd_pore_output_forehead.png" }, "nose": { "raw_score": 29.139814376831055, "ui_score": 58, "output_mask_name": "hd_pore_output_nose.png" }, "cheek": { "raw_score": 44.11081314086914, "ui_score": 65, "output_mask_name": "hd_pore_output_cheek.png" }, "whole": { "raw_score": 49.23978805541992, "ui_score": 67, "output_mask_name": "hd_pore_output_all.png" } }, "hd_wrinkle": { "forehead": { "raw_score": 55.96956729888916, "ui_score": 67, "output_mask_name": "hd_wrinkle_output_forehead.png" 
}, "glabellar": { "raw_score": 76.7251181602478, "ui_score": 75, "output_mask_name": "hd_wrinkle_output_glabellar.png" }, "crowfeet": { "raw_score": 83.4361481666565, "ui_score": 78, "output_mask_name": "hd_wrinkle_output_crowfeet.png" }, "periocular": { "raw_score": 67.88706302642822, "ui_score": 72, "output_mask_name": "hd_wrinkle_output_periocular.png" }, "nasolabial": { "raw_score": 74.03312683105469, "ui_score": 74, "output_mask_name": "hd_wrinkle_output_nasolabial.png" }, "marionette": { "raw_score": 71.94477319717407, "ui_score": 73, "output_mask_name": "hd_wrinkle_output_marionette.png" }, "whole": { "raw_score": 49.64699745178223, "ui_score": 65, "output_mask_name": "hd_wrinkle_output_all.png" } }, "all": { "score": 75.75757575757575 }, "skin_age": 37 }

Sample score_info.json of SD Skincare

{ "wrinkle": { "raw_score": 36.09360456466675, "ui_score": 60, "output_mask_name": "wrinkle_output.png" }, "droopy_upper_eyelid": { "raw_score": 79.05348539352417, "ui_score": 80, "output_mask_name": "droopy_upper_eyelid_output.png" }, "droopy_lower_eyelid": { "raw_score": 79.97175455093384, "ui_score": 81, "output_mask_name": "droopy_lower_eyelid_output.png" }, "firmness": { "raw_score": 89.66898322105408, "ui_score": 85, "output_mask_name": "firmness_output.png" }, "acne": { "raw_score": 92.29713000000001, "ui_score": 88, "output_mask_name": "acne_output.png" }, "moisture": { "raw_score": 48.694559931755066, "ui_score": 70, "output_mask_name": "moisture_output.png" }, "eye_bag": { "raw_score": 76.67280435562134, "ui_score": 79, "output_mask_name": "eye_bag_output.png" }, "dark_circle_v2": { "raw_score": 80.1993191242218, "ui_score": 76, "output_mask_name": "dark_circle_v2_output.png" }, "age_spot": { "raw_score": 83.23274230957031, "ui_score": 77, "output_mask_name": "age_spot_output.png" }, "radiance": { "raw_score": 76.57244205474854, "ui_score": 79, "output_mask_name": "radiance_output.png" }, "redness": { "raw_score": 72.011962890625, "ui_score": 77, "output_mask_name": "redness_output.png" }, "oiliness": { "raw_score": 60.74365234375, "ui_score": 72, "output_mask_name": "oiliness_output.png" }, "pore": { "raw_score": 88.38014125823975, "ui_score": 84, "output_mask_name": "pore_output.png" }, "texture": { "raw_score": 80.09742498397827, "ui_score": 76, "output_mask_name": "texture_output.png" }, "all": { "score": 75.75757575757575 }, "skin_age": 37 }
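Because some HD categories nest their scores one level deeper (hd_texture, hd_acne, hd_pore, hd_wrinkle), it can be convenient to flatten score_info.json before display. A sketch that relies only on the structure of the samples above; flatten_scores and load_score_info are hypothetical names, and the "all" and "skin_age" entries are deliberately left out of the flattened map.

```python
import json


def flatten_scores(score_info: dict) -> dict[str, dict]:
    """Flatten score_info.json into {"hd_redness": {...}, "hd_pore.nose": {...}, ...}."""
    flat = {}
    for name, value in score_info.items():
        if name in ("all", "skin_age"):
            continue  # overall score and skin age are not per-concern entries
        if "raw_score" in value:
            flat[name] = value  # flat category (all SD and most HD categories)
        else:
            for sub, scores in value.items():
                flat[f"{name}.{sub}"] = scores  # category with subcategories
    return flat


def load_score_info(path: str) -> dict:
    """Read score_info.json extracted from the skinanalysisResult folder."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```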

Supported Formats & Dimensions

| AI Feature | Supported Dimensions | Supported File Size | Supported Formats |
|---|---|---|---|
| SD Skincare | long side <= 4096, short side >= 480 | < 10MB | jpg/jpeg/png |
| HD Skincare | long side <= 4096, short side >= 1080 | < 10MB | jpg/jpeg/png |

Warning: It is your responsibility to resize input images to the Supported Dimensions of HD or SD Skincare before running AI Skin Analysis. A portrait rather than landscape aspect ratio is highly recommended for input images.

Suggestions for How to Shoot:

Get Ready to Start Skin Analysis Instructions

- Take off your glasses and make sure bangs are not covering your forehead

- Make sure that you’re in a well-lit environment

- Remove makeup to get more accurate results

- Look straight into the camera and keep your face in the center

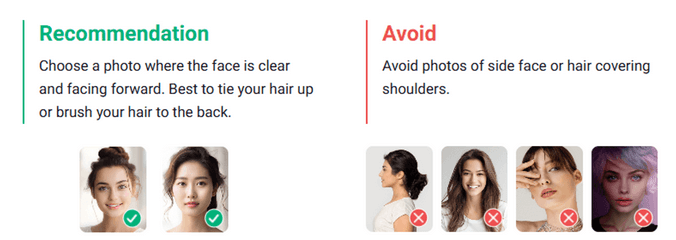

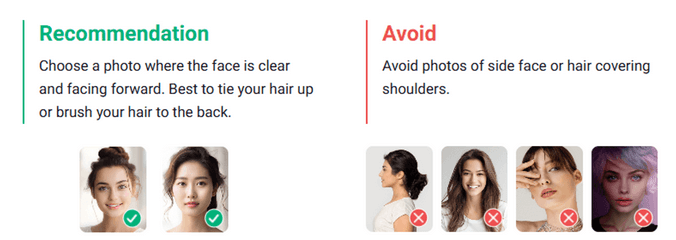

Photo requirement

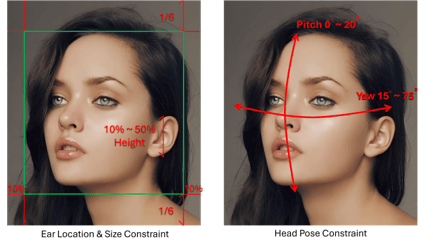

We will check the image quality to ensure it is suitable for AI Skin Analysis. Please make sure the face occupies approximately 60–80% of the image width, without any overlays or obstructions. The lighting should be bright and evenly distributed, avoiding overexposure or blown-out highlights. The pose should be front-facing, neutral, and relaxed, with the mouth closed and eyes open.

You should fully reveal your forehead and brush your fringe back or tie your hair to ensure the best quality. It is recommended that you remove your spectacles for optimal AI Skin Analysis performance, although this is not mandatory.

Warning: The width of the face needs to be greater than 60% of the width of the image.

Error Codes

| Error Code | Description |
|---|---|
| error_below_min_image_size | Input image resolution is too small |
| error_exceed_max_image_size | Input image resolution is too large |
| error_src_face_too_small | The face area in the uploaded image is too small. The width of the face needs to be greater than 60% of the width of the image. |
| error_src_face_out_of_bound | The face area in the uploaded image is out of bound |
| error_lighting_dark | The lighting in the uploaded image is too dark |

Environment & Dependency

| Sample Code Language / Tool | Recommended Runtime Versions |
|---|---|
| cURL | bash >= 3.2; curl >= 7.58 (modern TLS/HTTP support); jq >= 1.6 (robust JSON parsing) |
| Node.js (JavaScript) | Node >= 18 (for global fetch) |
| JavaScript | Chrome / Edge >= 80; Firefox >= 74; Safari >= 13.1 |
| PHP | PHP >= 7.4 (for modern TLS/compat), ext-curl (recommended) or allow_url_fopen=On + ext-openssl, ext-json |
| Python | Python >= 3.10 (for f-strings), requests >= 2.20.0 |
| Java | Java 11+ (for HttpClient), Jackson Databind >= 2.12.0 |

Overview

The JavaScript Camera Kit provides a complete in-browser camera solution designed for high-accuracy face-based imaging tasks. It includes:

- Camera permission handling

- Real-time face detection

- Automatic face quality validation (lighting, pose, angle, distance)

- Guided capture UI

- Multi-step capture flows for advanced hair/face tasks

- Support for both base64 and blob output formats

The module is particularly optimized for AI-driven image analysis, such as AI Skin Analysis (SD/HD), AI Face Tone Analysis, and hair-related analysis.

Download the JS Camera Kit

Include the JS Camera Kit SDK via CDN:

https://plugins-media.makeupar.com/v2.2-camera-kit/sdk.js

Once loaded, the SDK installs a global YMK object.

Quick Start Example

The following sample demonstrates:

- Loading the JS Camera Kit SDK

- Implementing window.ymkAsyncInit

- Initializing the module

- Opening the camera

- Receiving captured images

    <!DOCTYPE html>
    <html lang="en">
    <head>
      <meta charset="UTF-8" />
      <meta name="viewport" content="width=device-width, initial-scale=1.0" />
      <title>Camera Kit Sample</title>
    </head>
    <body>
      <script>
        window.ymkAsyncInit = function() {
          YMK.addEventListener('loaded', function() {
            /* Module fully loaded and ready */
          });
          YMK.addEventListener('faceDetectionCaptured', function(capturedResult) {
            /* Display all captured images */
            const container = document.getElementById('captured-results');
            container.innerHTML = '';
            for (const image of capturedResult.images) {
              const img = document.createElement('img');
              img.src = typeof image.image === 'string'
                ? image.image
                : URL.createObjectURL(image.image);
              container.appendChild(img);
            }
          });
        };

        function openCameraKit() {
          YMK.init({
            faceDetectionMode: 'skincare',
            imageFormat: 'base64',
            language: 'enu',
          });
          YMK.openCameraKit();
        }
      </script>
      <!-- Load SDK -->
      <script>
        window.addEventListener('load', function() {
          (function(d) {
            const s = d.createElement('script');
            s.type = 'text/javascript';
            s.async = true;
            s.src = 'https://plugins-media.makeupar.com/v2.2-camera-kit/sdk.js';
            d.getElementsByTagName('script')[0].parentNode.insertBefore(s, null);
          })(document);
        });
      </script>
      <button onClick="openCameraKit()">Open Camera Kit</button>
      <div id="YMK-module"></div>
      <h3>Captured Results:</h3>
      <div id="captured-results"></div>
    </body>
    </html>

Prerequisites

You must define the asynchronous initialization entry point:

```html
<script>
  window.ymkAsyncInit = function() {
    YMK.init(); // default settings
  };
</script>
```

Additional requirements:

| Requirement | Description |
|---|---|
| Browser | Must support getUserMedia |
| HTTPS | Required by most browsers for webcam access (getUserMedia is only available in secure contexts) |
| `<div id="YMK-module">` | Mandatory mount point for the UI |

Integration Guide

Step 1 — Initialize the Module

Call YMK.init() before calling YMK.openCameraKit():

```javascript
YMK.init({
  faceDetectionMode: 'skincare',
  imageFormat: 'base64',
  language: 'enu'
});
```

Supported Detection Modes

| Mode | Description |

|---|---|

makeup |

Standard camera mode for virtual cosmetic try-on |

skincare |

Standard skin analysis mode, close-up face capture |

hdskincare |

HD Skin capture using webcams with ≥ 2560px width |

shadefinder |

Skin Tone Analysis front-face capture |

facereshape |

AI Face Reshape capture |

hairlength |

Full hair-length capture (from a distance) |

hairfrizziness |

3-phase capture: front, right-turn, left-turn |

hairtype |

Same 3-phase multi-angle capture flow |

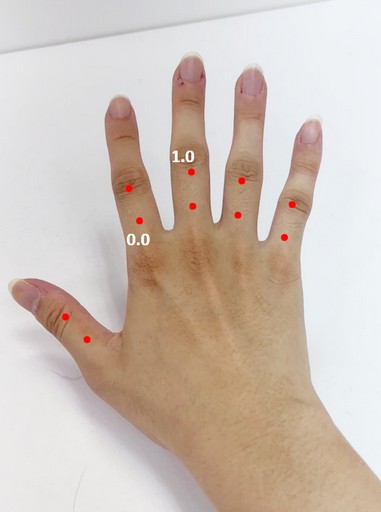

ring |

Hand capture for ring try‑on |

wrist |

Wrist capture for watch or bracelet try‑on |

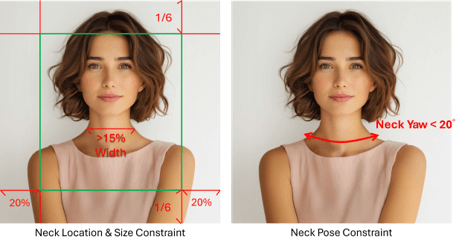

necklace |

Selfie capture for necklace try-on |

earring |

Selfie capture for earring try-on |

Step 2 — Add Event Handlers

For example:

```javascript
YMK.addEventListener('faceQualityChanged', function(q) {
  console.log('Quality updated:', q);
});
```

See the Events Reference below for the full list of events.

Step 3 — Open Camera Kit

```javascript
YMK.openCameraKit();
```

This automatically:

- Shows the UI

- Opens the webcam

- Begins real-time face quality monitoring

- Captures the image once conditions are acceptable

Step 4 — Receiving Captured Result

Captured images arrive via the faceDetectionCaptured event:

```javascript
YMK.addEventListener('faceDetectionCaptured', function(result) {
  console.log(result.images);
});
```

Step 5 — Close Module

```javascript
YMK.close();
```

API Reference

YMK.init(args)

Configures module appearance, detection mode, language, and capture format.

Arguments

args.faceDetectionMode

Detection flow to use.

Values:

"makeup" | "skincare" | "hdskincare" | "shadefinder" | "facereshape" | "hairlength" | "hairfrizziness" | "hairtype" | "ring" | "wrist" | "necklace" | "earring"

Default: "skincare"

args.width

Pixel width of the module container. Default: 360 on screens ≥ 500px; the screen width on screens < 500px.

Allowed: 300–1920

args.height

Pixel height of the module container. Default: 480 on screens ≥ 500px; min(screen.height, innerHeight) on smaller screens.

Allowed: 300–1920
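The default sizing rules above can be sketched as plain functions, for example to predict the container size before calling YMK.init. The names defaultModuleSize and isAllowedSize are hypothetical helpers, not SDK methods, and the sketch assumes that the 500px threshold refers to the screen width.

```javascript
// Sketch of the documented default container size (hypothetical helpers, not SDK API).
// Assumption: "screens >= 500px" refers to the screen width.
const MIN_SIZE = 300;  // documented lower bound for args.width / args.height
const MAX_SIZE = 1920; // documented upper bound

function defaultModuleSize(screenWidth, screenHeight, innerHeight) {
  const isLargeScreen = screenWidth >= 500;
  return {
    width: isLargeScreen ? 360 : screenWidth,
    height: isLargeScreen ? 480 : Math.min(screenHeight, innerHeight),
  };
}

// True when an explicit width/height lies inside the documented 300–1920 range.
function isAllowedSize(px) {
  return Number.isFinite(px) && px >= MIN_SIZE && px <= MAX_SIZE;
}

// Usage in a browser:
// const size = defaultModuleSize(screen.width, screen.height, window.innerHeight);
```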

args.language

UI language.

Available: "chs", "cht", "deu", "enu", "esp", "fra", "jpn", "kor", "ptb", "ita"

Default: "enu"

args.imageFormat

Format of the images returned via the faceDetectionCaptured event.

Values: "base64" or "blob"

Default: "base64"

args.disableCameraResolutionCheck

If true, the module runs even when the webcam does not meet the required resolution.

Default: false
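A minimal sketch of client-side validation for YMK.init(args), using only the values listed above. validateInitArgs is a hypothetical helper, not part of the SDK; it checks only the documented options, so the SDK may still reject or adjust values for reasons not covered here.

```javascript
// Hypothetical pre-flight check for YMK.init(args), based on the documented values only.
const DETECTION_MODES = [
  'makeup', 'skincare', 'hdskincare', 'shadefinder', 'facereshape',
  'hairlength', 'hairfrizziness', 'hairtype', 'ring', 'wrist', 'necklace', 'earring',
];
const LANGUAGES = ['chs', 'cht', 'deu', 'enu', 'esp', 'fra', 'jpn', 'kor', 'ptb', 'ita'];
const IMAGE_FORMATS = ['base64', 'blob'];

// Returns a list of problems; an empty list means the args look valid.
// Omitted options are accepted because the SDK applies its defaults.
function validateInitArgs(args = {}) {
  const problems = [];
  if (args.faceDetectionMode !== undefined && !DETECTION_MODES.includes(args.faceDetectionMode)) {
    problems.push(`unsupported faceDetectionMode: ${args.faceDetectionMode}`);
  }
  if (args.language !== undefined && !LANGUAGES.includes(args.language)) {
    problems.push(`unsupported language: ${args.language}`);
  }
  if (args.imageFormat !== undefined && !IMAGE_FORMATS.includes(args.imageFormat)) {
    problems.push(`unsupported imageFormat: ${args.imageFormat}`);
  }
  for (const key of ['width', 'height']) {
    const value = args[key];
    if (value !== undefined && !(Number.isFinite(value) && value >= 300 && value <= 1920)) {
      problems.push(`${key} must be between 300 and 1920, got ${value}`);
    }
  }
  if (args.disableCameraResolutionCheck !== undefined &&
      typeof args.disableCameraResolutionCheck !== 'boolean') {
    problems.push('disableCameraResolutionCheck must be a boolean');
  }
  return problems;
}

// Usage:
// const args = { faceDetectionMode: 'hairtype', imageFormat: 'blob' };
// const problems = validateInitArgs(args);
// if (problems.length === 0) YMK.init(args); else console.warn(problems);
```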

Other API Methods

YMK.openCameraKit()

Opens the module and begins detection.

YMK.addEventListener(eventName, callback)

Registers event callbacks.

Returns: EventListenerIdentifier

YMK.removeEventListener(id)

Removes the listener with the given identifier (as returned by YMK.addEventListener).

YMK.isLoaded()

Returns whether the live stream or a photo has been drawn on the canvas.

Returns: boolean

YMK.pause()

Pauses the webcam stream.

YMK.resume(restartWebcam = false)

Resumes webcam after pause.

YMK.getInfo()

Returns current module info:

```json
{
  "fps": 30
}
```

YMK.close()

Closes the module and the camera.
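Because YMK.addEventListener returns an identifier and YMK.removeEventListener takes that identifier, you can track listeners and remove them together. The following is a sketch. registerListeners is a hypothetical helper, and it assumes that the identifier returned by addEventListener is exactly what removeEventListener expects, as described above.

```javascript
// Hypothetical helper: register several Camera Kit listeners and remove them together.
// `ymk` is expected to be the global YMK object (any object with the same two methods works).
function registerListeners(ymk, handlers) {
  // Keep every identifier returned by addEventListener so it can be removed later.
  const ids = Object.entries(handlers).map(([eventName, callback]) =>
    ymk.addEventListener(eventName, callback)
  );
  // The returned function removes all listeners registered above; calling it again is a no-op.
  return function dispose() {
    for (const id of ids) ymk.removeEventListener(id);
    ids.length = 0;
  };
}

// Usage (browser):
// const dispose = registerListeners(YMK, {
//   faceDetectionCaptured: (result) => console.log(result.images),
//   cameraFailed: (err) => console.warn(err),
// });
// ...later
// YMK.close();
// dispose();
```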

Events Reference

Lifecycle Events

| Event | Description |
|---|---|
| opened | Module opened |
| loading | Loading progress (0–100) |
| loaded | Camera stream loaded onto canvas |
| closed | Module closed |

Camera Events

| Event | Description |
|---|---|
| cameraOpened | Webcam opened |
| cameraClosed | Webcam closed |
| cameraFailed | The webcam could not be opened (for example, permission denied or no webcam). Error codes: "error_resolution_unsupported", "error_permission_denied", "error_access_failed" |
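One way to handle cameraFailed is to map the documented error codes to user-facing messages. This sketch assumes you have already read the error code string from the event callback; this section does not specify the exact shape of the callback argument. The message texts and the describeCameraFailure name are illustrative.

```javascript
// Illustrative messages for the three documented cameraFailed error codes.
const CAMERA_FAILURE_MESSAGES = {
  error_resolution_unsupported: 'Your camera does not support the resolution required for this capture mode.',
  error_permission_denied: 'Camera access was denied. Please allow camera access and try again.',
  error_access_failed: 'The camera could not be opened. Check that a webcam is connected and not in use.',
};

// Hypothetical helper: returns a message for a known code, or a generic fallback.
function describeCameraFailure(code) {
  return Object.prototype.hasOwnProperty.call(CAMERA_FAILURE_MESSAGES, code)
    ? CAMERA_FAILURE_MESSAGES[code]
    : `Camera error: ${code}`;
}
```

If many of your users see error_resolution_unsupported, the documented args.disableCameraResolutionCheck option lets the module run even when the webcam does not meet the required resolution.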

faceQualityChanged

Fires continuously during detection.

```json
{
  "hasFace": true,
  "position": "good",
  "frontal": "good",
  "lighting": "ok"
}
```

Field Descriptions

| Field | Values | Meaning |
|---|---|---|
| hasFace | true/false | Whether face is detected |
| position | "good", "notgood", "toosmall", "outofboundary" | Face distance/size quality |
| frontal | "good", "notgood" | Whether user is facing forward |
| lighting | "good", "ok", "notgood" | Lighting strength |

Necklace/Earring

| Field | Values | Meaning |
|---|---|---|
| hasFace | true/false | Whether face is detected |
| pose | "good", "notgood" | Face pose quality |
| size | "good", "notgood" | Face size quality |

Wrist/Ring

| Field | Values | Meaning |
|---|---|---|
| hasFace | true/false | Whether face is detected |
| position | "good", "notgood" | Hand position quality |
| pose | "good", "notgood" | Hand pose quality |

Minimum Recommended Quality

```javascript
const minQuality = {
  hasFace: true,
  area: "good",
  frontal: "good",
  lighting: "ok"
};
```
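You can check each faceQualityChanged payload against the recommended minimum, for example to drive your own on-screen hints. Camera Kit already captures automatically once conditions are acceptable. This is a sketch: the payload example above uses position while minQuality uses area, so the hypothetical meetsMinQuality helper reads position and falls back to area. It also assumes that the lighting values are ordered notgood < ok < good.

```javascript
// Assumed ordering of lighting values, weakest to strongest.
const LIGHTING_RANK = { notgood: 0, ok: 1, good: 2 };

// Hypothetical helper: true when a face-mode quality payload meets the recommended minimum.
// Reads `position` (payload example) and falls back to `area` (minQuality object).
function meetsMinQuality(q) {
  const position = q.position ?? q.area;
  return (
    q.hasFace === true &&
    position === 'good' &&
    q.frontal === 'good' &&
    (LIGHTING_RANK[q.lighting] ?? -1) >= LIGHTING_RANK.ok
  );
}

// Usage (browser; showHint is a hypothetical UI function of your own):
// YMK.addEventListener('faceQualityChanged', (q) => showHint(!meetsMinQuality(q)));
```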

faceDetectionStarted

Fired when the user enters the detection UI.

faceDetectionCaptured

This event is fired after all required face quality validation checks have passed and the Camera Kit has successfully completed the capture workflow.

Depending on the selected faceDetectionMode, the event may contain one or multiple captured images (e.g., multi-angle capture for hair modes).

Callback Arguments

capturedResult — Object

The result object containing all successfully captured images and metadata.

Field Definitions

mode — string

Indicates the active faceDetectionMode used for capturing.

Possible values include:

"makeup" | "skincare" | "hdskincare" | "shadefinder" | "facereshape" | "hairlength" | "hairfrizziness" | "hairtype" | "ring" | "wrist" | "necklace" | "earring"

This value corresponds directly to the configuration set in YMK.init({ faceDetectionMode }).

images — Array

ImageObject Structure

| Field | Type | Description |
|---|---|---|
| phase | integer | The zero-based index representing the capture step. Examples: Skincare = 0; Hair frizziness sequence = 0 (front), 1 (right turn), 2 (left turn) |
| image | string or Blob | The captured image: a Base64 string if args.imageFormat = "base64", or a binary Blob if args.imageFormat = "blob" |
| width | integer | Pixel width of the captured image. |
| height | integer | Pixel height of the captured image. |

Notes

- The number of images varies depending on faceDetectionMode. For example, "hairfrizziness" and "hairtype" typically produce three images.

- Image resolution may differ from device to device. The actual pixel size depends on:

  - The user's webcam maximum resolution

  - The constraints and recommended settings for the selected mode

- All images are guaranteed to meet the minimum face quality requirements before this event is dispatched.
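For multi-phase modes, it can help to sort images by phase and label each step before sending them on. This sketch assumes that hairtype uses the same phase order as hairfrizziness (0 front, 1 right turn, 2 left turn) and that other modes produce a single image. Because the documentation says "typically", treat complete as a hint rather than a guarantee. organizeCapture is a hypothetical helper.

```javascript
// Assumption: both hair modes follow the documented hairfrizziness phase order.
const MULTI_PHASE_MODES = new Set(['hairfrizziness', 'hairtype']);
const MULTI_PHASE_LABELS = ['front', 'right turn', 'left turn'];

// Hypothetical helper: order a faceDetectionCaptured result by phase and label each image.
function organizeCapture(capturedResult) {
  const isMultiPhase = MULTI_PHASE_MODES.has(capturedResult.mode);
  const expectedCount = isMultiPhase ? MULTI_PHASE_LABELS.length : 1;
  // Copy before sorting so the original event payload is left untouched.
  const images = [...capturedResult.images].sort((a, b) => a.phase - b.phase);
  return {
    complete: images.length === expectedCount,
    images: images.map((img) => ({
      ...img,
      label: (isMultiPhase && MULTI_PHASE_LABELS[img.phase]) || `phase ${img.phase}`,
    })),
  };
}

// Usage (browser):
// YMK.addEventListener('faceDetectionCaptured', (result) => {
//   const { complete, images } = organizeCapture(result);
//   if (!complete) console.warn('Unexpected number of images for', result.mode);
// });
```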

Configuration Notes

Quality Requirements

- Ensure correct distance

- Ensure frontal face angle

- Provide sufficient lighting (avoid shadows)

Multi-Phase Capture

The hairtype and hairfrizziness modes require three captures:

- Front face

- Turn right

- Turn left

Make sure your faceDetectionCaptured handler can process one image per phase.

Create a new file.

To upload a file, first call the File API, which returns an upload URL. Upload your file to that URL. Once the upload finishes, use the file_id from the same response to start using our AI features.
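The two-step flow above (describe the file, then upload its bytes to the returned URL) can be sketched with fetch, which is available globally in Node >= 18 and in browsers. Several details are assumptions that this section does not confirm: the File API endpoint path (passed in as fileApiUrl), the Bearer-style Authorization header, and the url field holding the upload URL in each requests entry (the sample response below does not show it). base64ToBytes converts a Camera Kit base64 capture, with or without a data: URL prefix, into bytes. fetchImpl can be replaced for testing.

```javascript
// Decode a base64 string, optionally prefixed as a data URL ("data:image/jpeg;base64,...").
function base64ToBytes(base64) {
  const payload = base64.includes(',') ? base64.slice(base64.indexOf(',') + 1) : base64;
  const binary = atob(payload);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) bytes[i] = binary.charCodeAt(i);
  return bytes;
}

// Sketch of the File API flow. Assumptions: fileApiUrl is the File API endpoint,
// the token is sent as "Authorization: Bearer <token>", and requests[0].url holds the upload URL.
async function uploadFile({ fileApiUrl, accessToken, bytes, fileName, contentType, fetchImpl = fetch }) {
  // Step 1: describe the file so the API returns an upload request and a file_id.
  const createRes = await fetchImpl(fileApiUrl, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json', Authorization: `Bearer ${accessToken}` },
    body: JSON.stringify({
      files: [{ content_type: contentType, file_name: fileName, file_size: bytes.length }],
    }),
  });
  if (!createRes.ok) throw new Error(`File API request failed with status ${createRes.status}`);
  const file = (await createRes.json()).data.files[0];

  // Step 2: upload the bytes with the method and headers returned by the File API.
  const upload = file.requests[0];
  const uploadRes = await fetchImpl(upload.url, {
    method: upload.method,
    headers: upload.headers,
    body: bytes,
  });
  if (!uploadRes.ok) throw new Error(`Upload failed with status ${uploadRes.status}`);

  // The file_id from step 1 identifies the uploaded file in later AI task requests.
  return file.file_id;
}
```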

Authorizations:

Request Body schema: application/json (required)

| files required | Array of objects |

Responses: 200, 400, 401

Request sample (payload):

```json
{
  "files": [
    {
      "content_type": "image/jpg",
      "file_name": "my-selfie.jpg",
      "file_size": 50000
    }
  ]
}
```

Response sample (200):

```json
{
  "status": 200,
  "data": {
    "files": [
      {
        "content_type": "image/jpg",
        "file_name": "my-selfie.jpg",
        "file_id": "U8aqJbsXGT537jtGnEDFHqxdDXqh8+oTF/cSkLimzuvVwMP+Jb1XbjPsf7ZgUgLY",
        "requests": [
          {
            "headers": {
              "Content-Type": "image/jpg",
              "Content-Length": 50000
            },
            "method": "PUT"
          }
        ]
      }
    ]
  }
}
```

Run a Skin Analysis task.

After you start an AI task, keep polling its status at the given polling_interval until the status is either success or error. If you stop polling, the task times out. When you check its status later, you get an InvalidTaskId error even if the task finished successfully, and your units are still consumed.
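The polling requirement above amounts to a loop that stops only on a terminal status. In this sketch, checkStatus is a hypothetical function you supply. It should query the task status and resolve to an object with status and pollingIntervalMs, converting the API's polling_interval to milliseconds if needed. The terminal values "success" and "error" follow the wording above.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Sketch of a task-status polling loop (checkStatus is supplied by you; see the lead-in).
async function pollTask(checkStatus, { defaultIntervalMs = 1000, maxAttempts = 120 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await checkStatus();
    // Stop as soon as the task reaches a terminal state.
    if (result.status === 'success' || result.status === 'error') return result;
    // Wait for the interval the API asked for before checking again.
    if (attempt < maxAttempts) await sleep(result.pollingIntervalMs ?? defaultIntervalMs);
  }
  throw new Error(`Task still running after ${maxAttempts} status checks`);
}
```

Keep maxAttempts generous. Giving up early has the same effect as not polling, so the task may time out while its units are still consumed.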

Authorizations:

Request Body schema: application/json (required)

| src_file_url required | string. URL of the file to run the task on. The URL should be publicly accessible. |